When I started this most recent position, the decision to go to the cloud was already made. After 3+ years, I have learned a lot and want to share some of it with you. I want to do a series of cloud posts, and the first one is a bit of history, which was helpful to me for several reasons:

- It helps me understand where some of the products we see today came from

- I lived through most of it - because I’m old

- I like to pretend I’m @Bart_Elia writing novellas on long plane flights - only from my kitchen.

Over the next few posts, I want to talk about the things a company should consider before they move to the cloud. If you have any specific questions or topics, please feel free to send them my way: mwonsil at perceptron dot com. But for now, a little background.

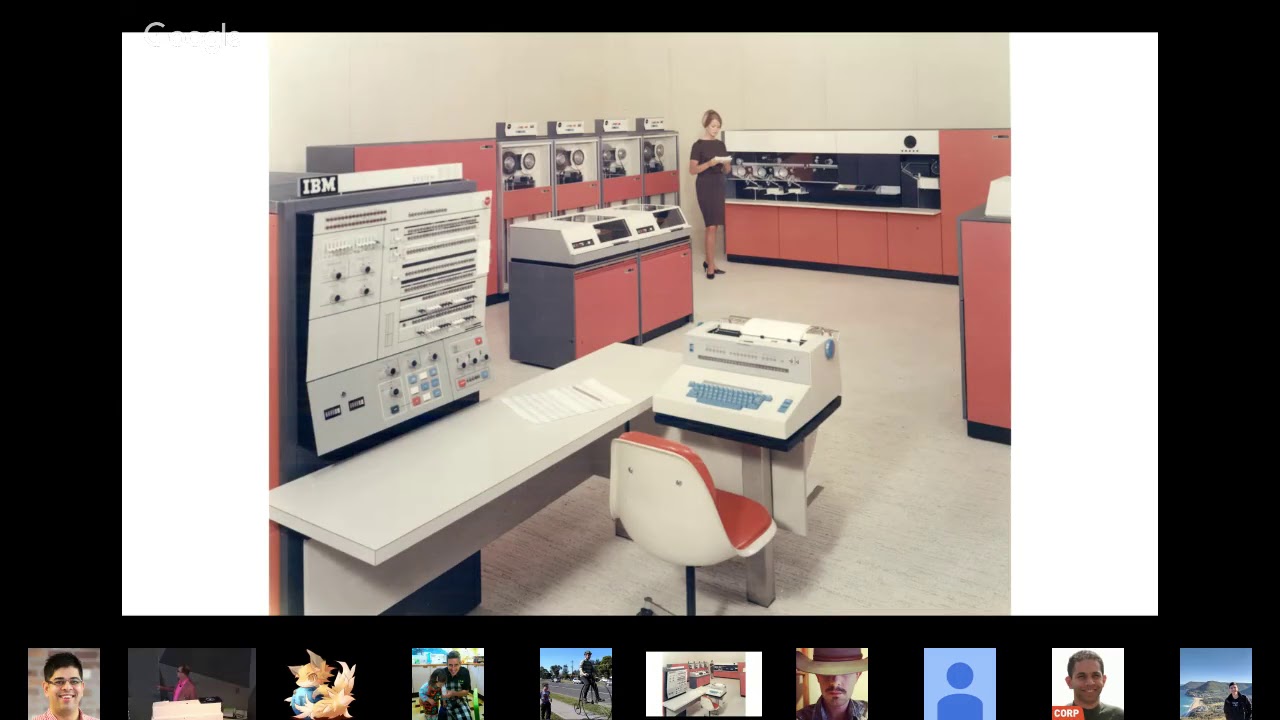

In the beginning, computing power was expensive. Only very large organizations could afford mainframes. They were large and required a lot of care. To offset the expense of these systems, people would often sell the unused capacity. This was called Time Sharing. People would buy excess time during off-peak hours.

The companies that owned these expensive mainframes couldn’t even afford a second one to use as a development or test system. In 1972, IBM created virtual machine software to run more than one operating system on their large computers.

Over time, companies like DEC, HP, IBM, Prime, Wang, Data General, and Apollo started to deliver minicomputers to bring computing power to more people. The minicomputer was smaller and more affordable. For both mainframes and minicomputers, people used a device called a terminal to communicate with the computer system.

In 1983, DEC added the ability to cluster several of these computers together to get even more power and add redundancy. The idea of clustering would become very important to the future of Cloud Computing.

Computers continued to get smaller and eventually there were computers on everybody’s desk. They were called microcomputers. These computers replaced the terminals and used software to emulate the physical terminals. The microcomputer kept getting more powerful, so powerful that it started to replace the minicomputer as a server.

These servers were much less expensive than a minicomputer, so much so that we began to see “server sprawl” in the computer room. It seemed every application required dedicated servers with different operating systems and other services with specific versions, but the price of several servers was still lower than that of the minicomputers they replaced. However, the cost of managing more and more servers (licenses, electricity, cooling, etc.) was starting to climb.

More than 25 years after IBM’s original virtual machine, the virtualization movement began again. In 1999, VMware released VMware Workstation, allowing users to run multiple operating systems on a microcomputer. Several companies followed with virtualization software for managing servers.

A few years later, the Linux community released several virtualization strategies, but these were different. Instead of virtualizing the entire operating system, they allowed the operating system to create “containers”, each running in a space separated from the other containers. Each container had the libraries required to run its application, had limited access to CPU and memory, and could even have its own IP address. With the ability to spin up many containers, companies had to create container managers. Several companies created container management software, but in 2013 Docker won a lot of the mind-share because it created a whole ecosystem around containers, from creation to deployment. In 2017, an open-source project called Kubernetes became the standard for container management, and it is now supported by Google, Microsoft, and Amazon.

The power of the Cloud comes from the combination of virtualization and clustering. Data centers can combine hundreds of computers to run millions of containers and operating systems at the same time. The clustering software provides redundancy and gives data centers the ability to adjust scale on the fly. Together with the drastic reduction in the price of storage and some powerful automation, this is the infrastructure that makes Cloud Computing what it is today.

In upcoming posts, we’ll discuss what products cloud companies offer, what a company should think about if it is considering a move to the cloud, what I have experienced with Epicor’s cloud solutions, and what the future may hold for them.