How to set up a question answering bot for any set of PDF files

1. Getting an OpenAI API Key

a) Navigate to https://platform.openai.com and create an account if you don’t have one.

b) Click on your user icon in the top right and click on Manage Account.

c) Click on the “Billing” tab.

d) Click on the “Usage Limits” item and set the hard and soft limits you want.

e) Click on the “Payment methods” menu item and add a payment method (if desired).

f) Under the User heading, click on API Keys.

g) Create a new secret key, give it a name, and save it somewhere safe. We will use it later.

2. Installing Python

NOTE: For this to work we need Python 3.9 (this guide installs 3.9.13) so we can use FAISS (Facebook AI Similarity Search). These steps are for Windows.

a) Open PowerShell as administrator.

b) Allow scripts to run in this PowerShell session: `Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope Process`

c) Install pyenv-win with:

```powershell
Invoke-WebRequest -UseBasicParsing -Uri "https://raw.githubusercontent.com/pyenv-win/pyenv-win/master/pyenv-win/install-pyenv-win.ps1" -OutFile "./install-pyenv-win.ps1"; &"./install-pyenv-win.ps1"
```

d) Close and reopen the terminal so the `pyenv` command becomes available.

e) Install Python 3.9.13 with: `pyenv install 3.9.13`

f) Enable Python 3.9.13 for the current shell with `pyenv shell 3.9.13` (or use `pyenv global 3.9.13` to make it your default everywhere).

g) Verify the installation by running `python --version`. It should print `Python 3.9.13`.

3. Installing dependencies

NOTE: If you use conda instead of pip, you can install faiss-cpu with:

```
conda install -c conda-forge faiss-cpu
```

a) Create a new project folder and within it, create another folder called pdfs and a file named requirements.txt with the following content:

```
aiohttp==3.8.4
aiosignal==1.3.1
async-timeout==4.0.2
attrs==23.1.0
certifi==2022.12.7
charset-normalizer==3.1.0
colorama==0.4.6
dataclasses-json==0.5.7
faiss-cpu==1.7.3
frozenlist==1.3.3
greenlet==2.0.2
idna==3.4
Jinja2==3.1.2
langchain==0.0.143
MarkupSafe==2.1.2
marshmallow==3.19.0
marshmallow-enum==1.5.1
multidict==6.0.4
mypy-extensions==1.0.0
numexpr==2.8.4
numpy==1.24.2
openai==0.27.4
openapi-schema-pydantic==1.2.4
packaging==23.1
pydantic==1.10.7
PyPDF2==3.0.1
PyYAML==6.0
regex==2023.3.23
requests==2.28.2
SQLAlchemy==1.4.*
tenacity==8.2.2
tiktoken==0.3.3
tqdm==4.65.0
typing-inspect==0.8.0
typing_extensions==4.5.0
urllib3==1.26.15
yarl==1.8.2
```

b) Open a terminal or command prompt and navigate to the directory we created in the previous step.

c) Create a new virtual environment to isolate your dependencies:

```
python -m venv env
```

d) Activate the virtual environment. On macOS/Linux, run:

```
source env/bin/activate
```

On Windows, the activate command is slightly different:

```
.\env\Scripts\activate
```

If this worked, you should see (env) at the start of your terminal prompt.

e) Install all the packages listed in requirements.txt into the virtual environment:

```
pip install -r requirements.txt
```
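If something goes wrong during installation, you can use a small standard-library script to compare the installed versions against the pins in requirements.txt. This is a minimal sketch. `parse_pins` and `check_pins` are hypothetical helpers, and they only understand simple `name==version` lines. They use `importlib.metadata.version`, which looks packages up by distribution name. On some Python versions, a name spelled differently from its installed metadata (for example, dashes versus underscores) may show up as missing.

```python
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path


def parse_pins(requirements_text):
    """Map package name -> pinned version for each 'name==version' line."""
    pins = {}
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if "==" in line:
            name, pinned = line.split("==", 1)
            pins[name.strip()] = pinned.strip()
    return pins


def check_pins(pins):
    """Return (name, pinned, installed) for every package that does not match its pin."""
    mismatches = []
    for name, pinned in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None  # not installed (or installed under a different name)
        if pinned.endswith(".*"):
            # A wildcard pin such as 1.4.* matches any version with that prefix.
            ok = installed is not None and installed.startswith(pinned[:-1])
        else:
            ok = installed == pinned
        if not ok:
            mismatches.append((name, pinned, installed))
    return mismatches


if __name__ == "__main__":
    req = Path("requirements.txt")
    if req.is_file():
        for name, pinned, installed in check_pins(parse_pins(req.read_text())):
            print(f"{name}: wanted {pinned}, found {installed}")
```

Run it from the project folder with the virtual environment active. It prints nothing when every pin matches.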

4. Building our program!

a) Create a new file called main.py

b) Add our imports and set our OpenAI API key as an environment variable (replace YOUR_API_KEY with the key you saved in step 1):

```python
import os
import pickle
from pathlib import Path

from PyPDF2 import PdfReader
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.text_splitter import CharacterTextSplitter
from langchain.vectorstores import FAISS
from langchain.chains.question_answering import load_qa_chain
from langchain.llms import OpenAI

os.environ["OPENAI_API_KEY"] = "YOUR_API_KEY"
```

c) Define a function to read the text of all the PDF files in a folder:

```python
# Define a function named read_pdf_texts that takes a folder path as input
def read_pdf_texts(pdf_folder):
    # Initialize an empty list to store the extracted text from all PDF files
    pdf_texts = []

    # Use pathlib to find all the PDF files in the specified folder
    for pdf_file in Path(pdf_folder).glob('*.pdf'):
        # Open each PDF file in binary mode
        with open(pdf_file, 'rb') as f:
            # Use PdfReader to parse the contents of the PDF file
            reader = PdfReader(f)

            # Initialize an empty string to store the raw text extracted from each page
            raw_text = ''

            # Iterate over all the pages in the PDF file and extract the text from each page
            for page in reader.pages:
                text = page.extract_text()  # Extract the text from the page
                if text:  # Skip pages with no extractable text (e.g. scanned images)
                    # Append the page text plus a newline so words on
                    # consecutive pages don't run together
                    raw_text += text + '\n'

        # Append the raw text from the PDF file to the pdf_texts list
        pdf_texts.append(raw_text)

    # Return the list of all extracted text from the PDF files
    return pdf_texts
```

d) Define our embeddings. An embedding is a numerical representation of a piece of text: a high-dimensional vector that captures the text's meaning, so texts with similar meanings end up close together. In simple terms, it puts words and sentences into a format a computer can compare. Machine learning models use embeddings for NLP tasks such as semantic search, sentiment analysis, text classification, and machine translation. Here, OpenAI's embedding model turns each chunk of PDF text, and later each question, into such a vector.
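"Close together" can be made concrete with cosine similarity, a common measure of how closely two vectors point in the same direction. The sketch below uses made-up 3-dimensional vectors purely for illustration. Real OpenAI embeddings have far more dimensions and come from the API. `cosine_similarity` is a hypothetical helper, not something the program calls.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up toy "embeddings" for three short texts.
copy_report = [0.9, 0.1, 0.0]       # "copy a report"
duplicate_report = [0.8, 0.2, 0.1]  # "duplicate a report"
print_invoice = [0.0, 0.2, 0.9]     # "print an invoice"

# Similar meanings score close to 1, unrelated ones close to 0.
print(round(cosine_similarity(copy_report, duplicate_report), 3))
print(round(cosine_similarity(copy_report, print_invoice), 3))
```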

```python
# Construct the OpenAI embeddings object, or load a previously pickled one.
# Note: this pickles the embeddings client and its settings, not the vectors for your PDFs.
def get_embeddings():
    # Check if the embeddings.pkl file already exists in the current directory
    if os.path.isfile("embeddings.pkl"):
        # If the file exists, open it in binary mode and load its contents using pickle
        with open("embeddings.pkl", "rb") as f:
            return pickle.load(f)
    else:
        # If the file does not exist, create a new OpenAIEmbeddings object and save it to a new file
        embeddings = OpenAIEmbeddings()
        with open("embeddings.pkl", "wb") as f:
            pickle.dump(embeddings, f)
        # Return the newly created embeddings object
        return embeddings
```
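The PDF chunks themselves are embedded by `FAISS.from_texts` on every run, which costs API calls each time. To skip that work on later runs, cache the computed result rather than the client. The general cache-or-compute pattern is sketched below. `load_or_compute` is a hypothetical helper. Within LangChain you would more likely save the built index with the FAISS vector store's `save_local`/`load_local` methods, but check that your pinned version provides them. Only unpickle files you created yourself, because loading a pickle can execute code.

```python
import os
import pickle


def load_or_compute(path, compute):
    """Return the object pickled at `path`; otherwise call compute(), pickle its result, and return it."""
    if os.path.isfile(path):
        # Cache hit: reuse the saved result instead of recomputing it.
        with open(path, "rb") as f:
            return pickle.load(f)
    # Cache miss: do the expensive work once and save it for next time.
    result = compute()
    with open(path, "wb") as f:
        pickle.dump(result, f)
    return result
```

A cache like this goes stale when you add or change PDFs. Delete the file to force a rebuild.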

e) Define our main function

```python
def main():
    # Prompt user to enter path to PDF folder
    pdf_folder = input("Enter the path to your PDF folder: ")

    # Extract text from all PDF files in the folder
    pdf_texts = read_pdf_texts(pdf_folder)

    # Define how to split text into smaller chunks
    text_splitter = CharacterTextSplitter(
        separator="\n",
        chunk_size=1000,
        chunk_overlap=100,
        length_function=len,
    )

    # Split each PDF's text into smaller chunks and store all chunks in a list
    all_texts = []
    for raw_text in pdf_texts:
        texts = text_splitter.split_text(raw_text)
        all_texts.extend(texts)

    # Stop early if no text was extracted (building the FAISS index needs at least one chunk)
    if not all_texts:
        print(f"No extractable text found in PDF files under {pdf_folder}")
        return

    # Get the OpenAI embeddings object used to turn text into vectors
    embeddings = get_embeddings()

    # Embed every chunk and index the vectors to enable efficient similarity search
    docsearch = FAISS.from_texts(all_texts, embeddings)

    # Create a question-answering chain that sends the retrieved chunks and the question to an OpenAI model
    # Note: You can specify the model to use by passing the model_name parameter:
    # OpenAI(model_name="text-curie-001"). It will use Davinci by default.
    chain = load_qa_chain(OpenAI(), chain_type="stuff")

    # Enter an infinite loop to prompt the user for questions and generate answers
    while True:
        # Prompt user to enter a question
        query = input("Enter your question (or 'quit' to exit): ")

        # If user enters "quit", break out of the loop and terminate the program
        if query.strip().lower() == 'quit':
            break

        # Find the chunks in the index most similar to the user's question
        docs = docsearch.similarity_search(query)

        # Print the retrieved chunks (LangChain Document objects) to the console
        print(docs)

        # Generate an answer by running the model on the question and the retrieved chunks
        answer = chain.run(input_documents=docs, question=query)

        # Print the answer to the console
        print(answer)
```
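The splitter settings in `main()` mean the following. The text is broken on newlines, and lines are packed into chunks of at most about 1000 characters. Up to about 100 characters from the end of one chunk are repeated at the start of the next, so a sentence cut at a boundary still appears whole in one chunk. Here is a simplified, dependency-free sketch of that idea. `split_with_overlap` is hypothetical and is not LangChain's actual algorithm.

```python
def split_with_overlap(text, separator="\n", chunk_size=1000, chunk_overlap=100):
    """Greedily pack separator-delimited pieces into chunks of at most chunk_size characters.

    Each new chunk starts with the trailing pieces of the previous chunk whose
    combined length fits in chunk_overlap, so context carries across boundaries.
    A single piece longer than chunk_size is kept whole rather than cut.
    """
    pieces = [p for p in text.split(separator) if p]
    chunks = []
    current = []  # pieces making up the chunk being built

    def joined_len(parts):
        return len(separator.join(parts))

    for piece in pieces:
        if current and joined_len(current + [piece]) > chunk_size:
            chunks.append(separator.join(current))
            # Carry over the longest tail of the finished chunk that fits the overlap budget.
            tail = []
            for prev in reversed(current):
                if joined_len([prev] + tail) > chunk_overlap:
                    break
                tail.insert(0, prev)
            # Drop overlap pieces if they would push the next chunk past chunk_size.
            while tail and joined_len(tail + [piece]) > chunk_size:
                tail.pop(0)
            current = tail
        current.append(piece)
    if current:
        chunks.append(separator.join(current))
    return chunks


if __name__ == "__main__":
    sample = "\n".join(f"line{i:02d}" for i in range(10))
    for chunk in split_with_overlap(sample, chunk_size=20, chunk_overlap=7):
        print(repr(chunk))
```

With these small settings, each chunk holds three lines and repeats the last line of the previous chunk.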

f) Define our entry point

if __name__ == "__main__":

main()
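`FAISS.from_texts` embeds every chunk and stores the vectors in an index. `similarity_search(query)` embeds the question the same way and returns the chunks whose vectors are nearest to it, 4 by default in LangChain. The underlying idea is a nearest-neighbour search, sketched below as a brute-force ranking by L2 (Euclidean) distance. FAISS does the same kind of search much more efficiently. `top_k_nearest` is a hypothetical helper, and the 2-D vectors are made up for illustration.

```python
import math


def l2_distance(a, b):
    """Euclidean (L2) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k_nearest(query_vec, doc_vecs, k=4):
    """Return the indices of the k vectors in doc_vecs closest to query_vec, nearest first."""
    ranked = sorted(range(len(doc_vecs)), key=lambda i: l2_distance(query_vec, doc_vecs[i]))
    return ranked[:k]


if __name__ == "__main__":
    chunks = ["copy a report style", "delete a report", "print an invoice"]
    # Made-up 2-D vectors standing in for real embeddings of the chunks above.
    chunk_vecs = [[0.9, 0.1], [0.6, 0.4], [0.1, 0.9]]
    question_vec = [0.85, 0.15]  # pretend embedding of "How do I copy a report?"
    for i in top_k_nearest(question_vec, chunk_vecs, k=2):
        print(chunks[i])
```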

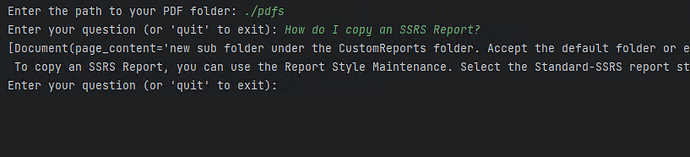

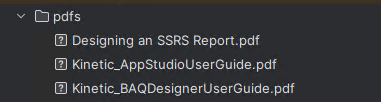
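`chain_type="stuff"` is the simplest way to combine the retrieved chunks. They are all "stuffed" into a single prompt together with the question, and the model answers from that context in one call. This also means the retrieved chunks plus the question must fit in the model's context window, which is one reason the text is split into chunks of about 1000 characters. Below is a rough sketch of that prompt assembly. `build_stuff_prompt` is hypothetical, and the template wording is illustrative, not the prompt LangChain actually sends.

```python
def build_stuff_prompt(chunks, question, separator="\n\n"):
    """Put every retrieved chunk and the question into one prompt string."""
    # "Stuff" all chunks together into a single context block.
    context = separator.join(chunk.strip() for chunk in chunks)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Made-up example chunks.
    retrieved = [
        "To copy a report style, open Report Style Maintenance.",
        "Select the Overflow menu and choose Copy Report Style.",
    ]
    print(build_stuff_prompt(retrieved, "How do I copy an SSRS Report?"))
```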

5. Running our program!

Throw some PDFs into the pdfs folder (or any other folder).

Run the program like so:

```
python main.py
```

Profit??

```text
Enter the path to your PDF folder: ./pdfs
Enter your question (or 'quit' to exit): How do I copy an SSRS Report?
To copy an SSRS Report, you can use the Report Style Maintenance. Select the Standard-SSRS report style or some other SSRS report style, select the link for the SSRS style, and select the Overflow menu and Copy Report Style. This will create a new folder on the server and copy the report data definition (.rdl file) to the new folder.
```

And here is the full code for the program:

```python
import os
import pickle
from pathlib import Path

from PyPDF2 import PdfReader
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.text_splitter import CharacterTextSplitter
from langchain.vectorstores import FAISS
from langchain.chains.question_answering import load_qa_chain
from langchain.llms import OpenAI

os.environ["OPENAI_API_KEY"] = "YOUR_API_KEY"


# Define a function named read_pdf_texts that takes a folder path as input
def read_pdf_texts(pdf_folder):
    # Initialize an empty list to store the extracted text from all PDF files
    pdf_texts = []

    # Use pathlib to find all the PDF files in the specified folder
    for pdf_file in Path(pdf_folder).glob('*.pdf'):
        # Open each PDF file in binary mode
        with open(pdf_file, 'rb') as f:
            # Use PdfReader to parse the contents of the PDF file
            reader = PdfReader(f)

            # Initialize an empty string to store the raw text extracted from each page
            raw_text = ''

            # Iterate over all the pages in the PDF file and extract the text from each page
            for page in reader.pages:
                text = page.extract_text()  # Extract the text from the page
                if text:  # Skip pages with no extractable text (e.g. scanned images)
                    # Append the page text plus a newline so words on
                    # consecutive pages don't run together
                    raw_text += text + '\n'

        # Append the raw text from the PDF file to the pdf_texts list
        pdf_texts.append(raw_text)

    # Return the list of all extracted text from the PDF files
    return pdf_texts


# Construct the OpenAI embeddings object, or load a previously pickled one.
# Note: this pickles the embeddings client and its settings, not the vectors for your PDFs.
def get_embeddings():
    # Check if the embeddings.pkl file already exists in the current directory
    if os.path.isfile("embeddings.pkl"):
        # If the file exists, open it in binary mode and load its contents using pickle
        with open("embeddings.pkl", "rb") as f:
            return pickle.load(f)
    else:
        # If the file does not exist, create a new OpenAIEmbeddings object and save it to a new file
        embeddings = OpenAIEmbeddings()
        with open("embeddings.pkl", "wb") as f:
            pickle.dump(embeddings, f)
        # Return the newly created embeddings object
        return embeddings


def main():
    # Prompt user to enter path to PDF folder
    pdf_folder = input("Enter the path to your PDF folder: ")

    # Extract text from all PDF files in the folder
    pdf_texts = read_pdf_texts(pdf_folder)

    # Define how to split text into smaller chunks
    text_splitter = CharacterTextSplitter(
        separator="\n",
        chunk_size=1000,
        chunk_overlap=100,
        length_function=len,
    )

    # Split each PDF's text into smaller chunks and store all chunks in a list
    all_texts = []
    for raw_text in pdf_texts:
        texts = text_splitter.split_text(raw_text)
        all_texts.extend(texts)

    # Stop early if no text was extracted (building the FAISS index needs at least one chunk)
    if not all_texts:
        print(f"No extractable text found in PDF files under {pdf_folder}")
        return

    # Get the OpenAI embeddings object used to turn text into vectors
    embeddings = get_embeddings()

    # Embed every chunk and index the vectors to enable efficient similarity search
    docsearch = FAISS.from_texts(all_texts, embeddings)

    # Create a question-answering chain that sends the retrieved chunks and the question to an OpenAI model
    # Note: You can specify the model to use by passing the model_name parameter:
    # OpenAI(model_name="text-curie-001"). It will use Davinci by default.
    chain = load_qa_chain(OpenAI(), chain_type="stuff")

    # Enter an infinite loop to prompt the user for questions and generate answers
    while True:
        # Prompt user to enter a question
        query = input("Enter your question (or 'quit' to exit): ")

        # If user enters "quit", break out of the loop and terminate the program
        if query.strip().lower() == 'quit':
            break

        # Find the chunks in the index most similar to the user's question
        docs = docsearch.similarity_search(query)

        # Print the retrieved chunks (LangChain Document objects) to the console
        print(docs)

        # Generate an answer by running the model on the question and the retrieved chunks
        answer = chain.run(input_documents=docs, question=query)

        # Print the answer to the console
        print(answer)


if __name__ == "__main__":
    main()
```