We are using net.tcp Windows bindings.

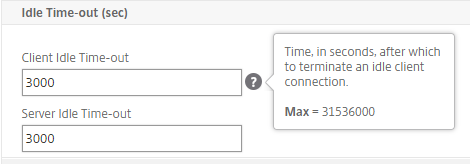

**We have two types of timeout:**

- Client idle timeout, set to 3000 seconds (now changed to 10200 seconds)

- Server idle timeout, set to 3000 seconds (now changed to 10200 seconds)

As for retries, we didn't observe any: most of the traces, if not all, show 0. We will keep monitoring this.

But at random times we get the exception below in the client traces:


System.ServiceModel.CommunicationException: The socket connection was aborted. This could be caused by an error processing your message or a receive timeout being exceeded by the remote host, or an underlying network resource issue. Local socket timeout was '02:50:00'. ---> System.IO.IOException: The write operation failed, see inner exception. ---> System.ServiceModel.CommunicationException: The socket connection was aborted. This could be caused by an error processing your message or a receive timeout being exceeded by the remote host, or an underlying network resource issue. Local socket timeout was '02:50:00'. ---> System.Net.Sockets.SocketException: An existing connection was forcibly closed by the remote host

at System.Net.Sockets.Socket.Send(Byte[] buffer, Int32 offset, Int32 size, SocketFlags socketFlags)

at System.ServiceModel.Channels.SocketConnection.Write(Byte[] buffer, Int32 offset, Int32 size, Boolean immediate, TimeSpan timeout)

--- End of inner exception stack trace ---

at System.ServiceModel.Channels.SocketConnection.Write(Byte[] buffer, Int32 offset, Int32 size, Boolean immediate, TimeSpan timeout)

at System.ServiceModel.Channels.BufferedConnection.WriteNow(Byte[] buffer, Int32 offset, Int32 size, TimeSpan timeout, BufferManager bufferManager)

at System.ServiceModel.Channels.BufferedConnection.Write(Byte[] buffer, Int32 offset, Int32 size, Boolean immediate, TimeSpan timeout)

at System.ServiceModel.Channels.ConnectionStream.Write(Byte[] buffer, Int32 offset, Int32 count)

at System.Net.Security.NegotiateStream.StartWriting(Byte[] buffer, Int32 offset, Int32 count, AsyncProtocolRequest asyncRequest)

at System.Net.Security.NegotiateStream.ProcessWrite(Byte[] buffer, Int32 offset, Int32 count, AsyncProtocolRequest asyncRequest)

--- End of inner exception stack trace ---

at System.Net.Security.NegotiateStream.ProcessWrite(Byte[] buffer, Int32 offset, Int32 count, AsyncProtocolRequest asyncRequest)

at System.Net.Security.NegotiateStream.Write(Byte[] buffer, Int32 offset, Int32 count)

at System.ServiceModel.Channels.StreamConnection.Write(Byte[] buffer, Int32 offset, Int32 size, Boolean immediate, TimeSpan timeout)

--- End of inner exception stack trace ---


Server stack trace:

at System.ServiceModel.Channels.StreamConnection.Write(Byte[] buffer, Int32 offset, Int32 size, Boolean immediate, TimeSpan timeout)

at System.ServiceModel.Channels.StreamConnection.Write(Byte[] buffer, Int32 offset, Int32 size, Boolean immediate, TimeSpan timeout, BufferManager bufferManager)

at System.ServiceModel.Channels.FramingDuplexSessionChannel.OnSendCore(Message message, TimeSpan timeout)

at System.ServiceModel.Channels.TransportDuplexSessionChannel.OnSend(Message message, TimeSpan timeout)

at System.ServiceModel.Channels.OutputChannel.Send(Message message, TimeSpan timeout)

at System.ServiceModel.Dispatcher.DuplexChannelBinder.Request(Message message, TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannel.Call(String action, Boolean oneway, ProxyOperationRuntime operation, Object[] ins, Object[] outs, TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannelProxy.InvokeService(IMethodCallMessage methodCall, ProxyOperationRuntime operation)

at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message)


Exception rethrown at [0]:

at System.Runtime.Remoting.Proxies.RealProxy.HandleReturnMessage(IMessage reqMsg, IMessage retMsg)

at System.Runtime.Remoting.Proxies.RealProxy.PrivateInvoke(MessageData& msgData, Int32 type)

at Ice.Contracts.ReportMonitorSvcContract.GetRowsKeepIdleTime(String whereClauseSysRptLst, Int32 pageSize, Int32 absolutePage, Boolean& morePages)

at Ice.Proxy.BO.ReportMonitorImpl.GetRowsKeepIdleTime(String whereClauseSysRptLst, Int32 pageSize, Int32 absolutePage, Boolean& morePages)
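
As a quick sanity check on the numbers: the "Local socket timeout" of 02:50:00 reported in the exception appears to be the same value as the current 10200-second idle timeout, just expressed as hours, minutes and seconds. A minimal Python sketch of that conversion (the helper name `as_hms` is only for illustration and is not part of any product):

```python
from datetime import timedelta


def as_hms(seconds: int) -> str:
    """Format a number of seconds as H:MM:SS."""
    return str(timedelta(seconds=seconds))


# Values taken from the timeout list above.
old_idle_timeout = 3000    # seconds, original setting
new_idle_timeout = 10200   # seconds, current setting

print(as_hms(old_idle_timeout))  # 0:50:00
print(as_hms(new_idle_timeout))  # 2:50:00
```

So the client side does seem to be using the new value, and the message "An existing connection was forcibly closed by the remote host" says the close came from the other end of the connection rather than from the local timeout expiring.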

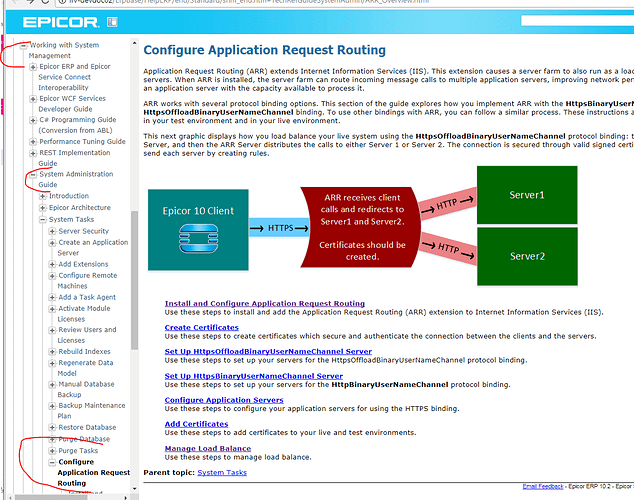

As for the ARR configuration: previously, packets were distributed across both servers. Now, once a client contacts the load balancer, it is assigned to one app server that serves all of its sessions, which should bring some improvement.

But I'm looking to see if there are any specific recommendations or best practices on what is required on hardware load balancers to ensure smooth traffic flow and avoid performance issues.
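
To make the "one client, one app server" behaviour concrete, here is a rough Python sketch of the general idea of client affinity. It is only an illustration under stated assumptions: the server names are hypothetical, and hashing a client identifier is just one simple way to get a stable assignment, not necessarily how ARR or any particular hardware load balancer implements it.

```python
import hashlib

# Hypothetical app server names, for illustration only.
APP_SERVERS = ["app-server-1", "app-server-2"]


def pick_server(client_id: str, servers=APP_SERVERS) -> str:
    """Map a client identifier to one server, always the same one."""
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    # Use the first 8 bytes of the hash as a stable integer.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# Every request from the same client lands on the same server.
first = pick_server("10.0.0.5")
second = pick_server("10.0.0.5")
print(first == second)  # True
```

The point is only that all sessions of a given client go to a single server, instead of individual packets or requests being spread over both.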