Hey folks,

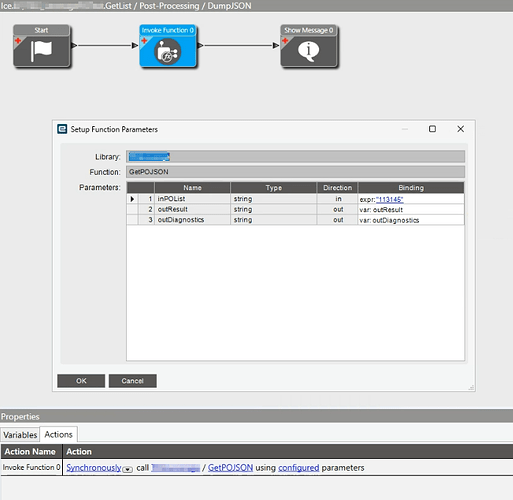

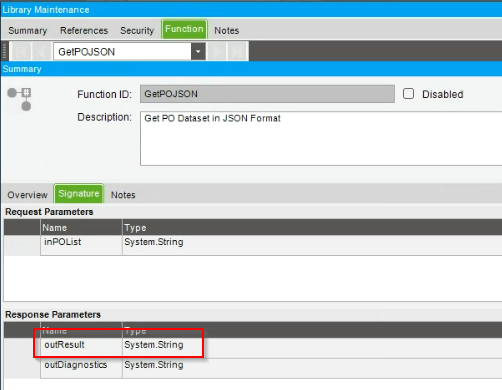

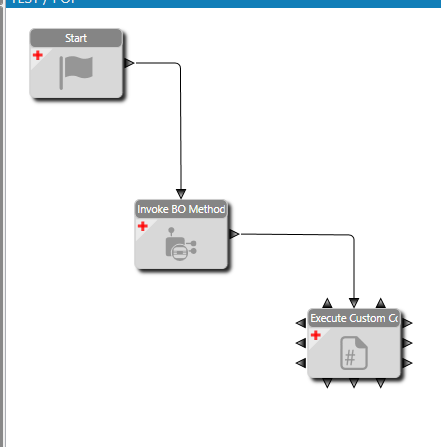

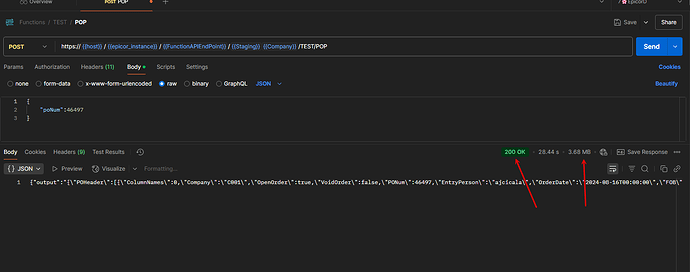

I have an Epicor function that bundles up a PO dataset and converts it to JSON.

It works fine until the PO data gets too big, I think.

It works until the returned JSON gets past roughly 47k (I’m guessing there’s a 50k limit). After that I get an error like the one below.
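The parser's complaint fits a payload being cut off partway through a string. Here is a small sketch that reproduces the same kind of error. It uses Python's `json` module in place of Newtonsoft, and the nested `Context.BpmData[0].Character17` shape is only a hypothetical, simplified mirror of the path in the error. In the stack trace further down, the failure happens while the client reads response headers (`ProcessJsonReturnHeader`, `SetResponseHeaders`). That could mean the context is truncated in transit, but this is a guess, not something confirmed here.

```python
import json

def build_context(result_json: str) -> str:
    """Wrap a result string the way the error path suggests:
    Context.BpmData[0].Character17 (a hypothetical, simplified shape)."""
    context = {"Context": {"BpmData": [{"Character17": result_json}]}}
    return json.dumps(context)

def parse_truncated(text: str, limit: int) -> str:
    """Parse only the first `limit` characters; return 'parsed OK' or the decoder's message."""
    try:
        json.loads(text[:limit])
        return "parsed OK"
    except json.JSONDecodeError as exc:
        return exc.msg

if __name__ == "__main__":
    # Placeholder PO-like records; field names are not Epicor's schema.
    pos = [{"PONum": n, "Receipts": [{"PackSlip": f"PS{n}-{i}"} for i in range(5)]}
           for n in range(20)]
    wire = build_context(json.dumps(pos, indent=2))
    print(parse_truncated(wire, len(wire)))       # parsed OK
    print(parse_truncated(wire, len(wire) // 2))  # Unterminated string starting at
```

The full text parses cleanly. Cutting it anywhere inside the `Character17` value leaves an open string, which is the same failure Newtonsoft reports.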

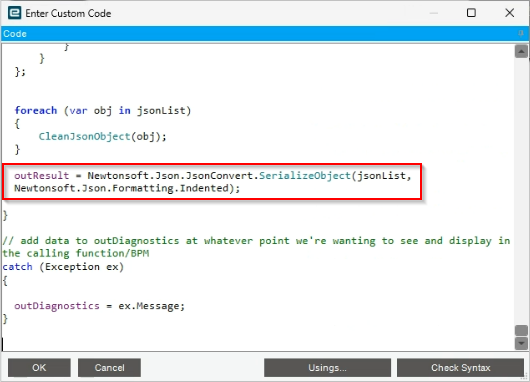

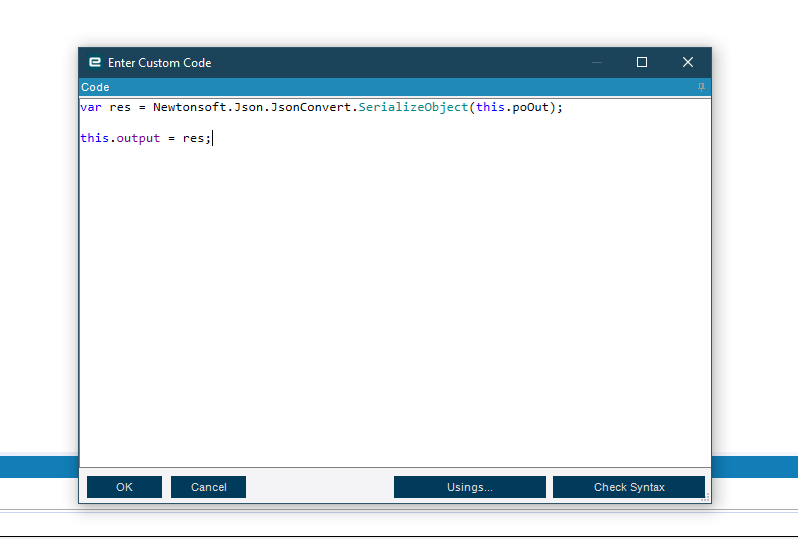

Here’s the serialization statement:

```csharp
outResult = Newtonsoft.Json.JsonConvert.SerializeObject(jsonList, Newtonsoft.Json.Formatting.Indented);
```

It’s returned to the caller as a `System.String`.

Note that the error message references `Context.BpmData[0].Character17`. I’m guessing Epicor uses that field as a workspace. Maybe I’m filling it up?
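If the result string really is carried as a string value inside the context JSON, it gets escaped again. Every `"` becomes `\"`, every line break becomes `\n`, and the encoded size ends up larger than `outResult.Length`. The sketch below is a rough way to measure that growth. It uses Python's `json` module rather than Newtonsoft, and the row fields are placeholders:

```python
import json
from typing import Tuple

def sizes(result_json: str) -> Tuple[int, int]:
    """Return (raw length, length once encoded as a JSON string value)."""
    # json.dumps on a str adds surrounding quotes and escapes each " as \" and each newline as \n.
    return len(result_json), len(json.dumps(result_json))

if __name__ == "__main__":
    # Placeholder rows, not the real PO dataset.
    rows = [{"PONum": 1000 + n, "PartNum": f"P-{n}", "OurQty": 5} for n in range(50)]
    raw, embedded = sizes(json.dumps(rows, indent=2))
    print(raw, embedded, f"growth: {embedded / raw:.2f}x")
```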

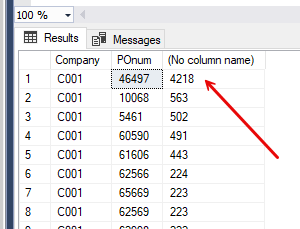

A single PO starts failing at around five receipt lines (plus related records). I’m including receipts and invoices at both the line and release level, so there’s a lot of duplication.

I can get four or five POs returned, each with a single line and receipt, before the crash.
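If there really is a hard ceiling, one workaround to consider is returning the POs in size-bounded batches instead of one big string. Below is a minimal greedy sketch in Python. `batch_by_size` and the 200-character budget in the test are hypothetical. The budget is measured on compact JSON, before any extra escaping the transport might add.

```python
import json
from typing import Iterable, List

def _compact(items: List[dict]) -> str:
    # No whitespace, roughly what Newtonsoft's Formatting.None produces.
    return json.dumps(items, separators=(",", ":"))

def batch_by_size(records: Iterable[dict], max_chars: int) -> List[str]:
    """Greedily pack records into compact JSON arrays no longer than max_chars each.
    A single record that alone exceeds max_chars still gets its own batch."""
    batches: List[str] = []
    current: List[dict] = []
    for rec in records:
        candidate = current + [rec]
        if current and len(_compact(candidate)) > max_chars:
            batches.append(_compact(current))  # close the full batch
            current = [rec]                    # start a new one with this record
        else:
            current = candidate
    if current:
        batches.append(_compact(current))
    return batches
```

The caller would then request the batches one at a time, for example through a hypothetical batch-index parameter. How that would map onto an Epicor function signature is left open here.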

I could probably squeeze out a bit of room by not indenting, but I don’t think it would get me far. The data requirements aren’t my own.
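The sketch below puts rough numbers on the indentation question. It compares indented and compact output, both raw and after being re-encoded as a JSON string value. Python's `indent=2` only approximates `Formatting.Indented`, since Newtonsoft's exact whitespace and line endings may differ. `separators=(",", ":")` should be close to `Newtonsoft.Json.Formatting.None`. The nested structure is a placeholder.

```python
import json

def compare_formats(data) -> dict:
    """Character counts for indented vs compact JSON, raw and re-encoded as a string value."""
    indented = json.dumps(data, indent=2)              # roughly Formatting.Indented
    compact = json.dumps(data, separators=(",", ":"))  # roughly Formatting.None
    return {
        "indented": len(indented),
        "compact": len(compact),
        "indented_embedded": len(json.dumps(indented)),
        "compact_embedded": len(json.dumps(compact)),
    }

if __name__ == "__main__":
    # Placeholder PO -> line -> receipt nesting.
    pos = [{"PONum": n,
            "Lines": [{"POLine": l, "Receipts": [{"RcptLine": r} for r in range(3)]}
                      for l in range(2)]}
           for n in range(10)]
    for name, size in compare_formats(pos).items():
        print(f"{name:>18}: {size}")
```

In the C# statement, the equivalent change would be passing `Newtonsoft.Json.Formatting.None` instead of `Formatting.Indented`. The savings depend on how deeply the data nests. Each line break also costs two characters once the string is escaped again.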

Any experience doing something like this? Has anyone hit size limits? Hints, remedies, random suggestions?

Thanks,

Joe

```
Application Error

Exception caught in: Newtonsoft.Json

Error Detail
============
Message: Unterminated string. Expected delimiter: ". Path 'Context.BpmData[0].Character17', line 1, position 351.
Program: Newtonsoft.Json.dll
Method: ReadStringIntoBuffer

Client Stack Trace
==================
at Newtonsoft.Json.JsonTextReader.ReadStringIntoBuffer(Char quote)
at Newtonsoft.Json.JsonTextReader.ParseProperty()
at Newtonsoft.Json.JsonTextReader.ParseObject()
at Newtonsoft.Json.JsonTextReader.Read()
at Ice.Api.Serialization.JsonReaderExtensions.ReadAndAssert(JsonReader reader)
at Ice.Api.Serialization.IceTableConverter.CreateRow(JsonReader reader, IIceTable table, Lazy`1 lazyUDColumns, JsonSerializer serializer)
at Ice.Api.Serialization.IceTableConverter.ReadJson(JsonReader reader, Type objectType, Object existingValue, JsonSerializer serializer)
at Ice.Api.Serialization.IceTablesetConverter.ReadJson(JsonReader reader, Type objectType, Object existingValue, JsonSerializer serializer)
at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.DeserializeConvertable(JsonConverter converter, JsonReader reader, Type objectType, Object existingValue)
at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.SetPropertyValue(JsonProperty property, JsonConverter propertyConverter, JsonContainerContract containerContract, JsonProperty containerProperty, JsonReader reader, Object target)
at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.PopulateObject(Object newObject, JsonReader reader, JsonObjectContract contract, JsonProperty member, String id)
at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.CreateObject(JsonReader reader, Type objectType, JsonContract contract, JsonProperty member, JsonContainerContract containerContract, JsonProperty containerMember, Object existingValue)
at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.CreateValueInternal(JsonReader reader, Type objectType, JsonContract contract, JsonProperty member, JsonContainerContract containerContract, JsonProperty containerMember, Object existingValue)
at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.Deserialize(JsonReader reader, Type objectType, Boolean checkAdditionalContent)
at Newtonsoft.Json.JsonSerializer.DeserializeInternal(JsonReader reader, Type objectType)
at Epicor.ServiceModel.Channels.ImplBase.ProcessJsonReturnHeader[TOut](KeyValuePair`2 messageHeader)
at Epicor.ServiceModel.Channels.ImplBase.SetResponseHeaders(HttpResponseHeaders httpResponseHeaders)
at Epicor.ServiceModel.Channels.ImplBase.HandleContractAfterCall(HttpResponseHeaders httpResponseHeaders)
at Epicor.ServiceModel.Channels.ImplBase.CallWithMultistepBpmHandling(String methodName, ProxyValuesIn valuesIn, ProxyValuesOut valuesOut, Boolean useSparseCopy)
at Epicor.ServiceModel.Channels.ImplBase.Call(String methodName, ProxyValuesIn valuesIn, ProxyValuesOut valuesOut, Boolean useSparseCopy)
at Ice.Proxy.BO.DynamicQueryImpl.GetList(DynamicQueryDataSet queryDS, QueryExecutionDataSet executionParams, Int32 pageSize, Int32 absolutePage, Boolean& hasMorePage)
at Ice.Adapters.DynamicQueryAdapter.<>c__DisplayClass45_0.<GetList>b__0(DataSet datasetToSend)
at Ice.Adapters.DynamicQueryAdapter.ProcessUbaqMethod(String methodName, DataSet updatedDS, Func`2 methodExecutor, Boolean refreshQueryResultsDataset)
at Ice.Adapters.DynamicQueryAdapter.GetList(DynamicQueryDataSet queryDS, QueryExecutionDataSet execParams, Int32 pageSize, Int32 absolutePage, Boolean& hasMorePage)
at Ice.UI.App.BAQDesignerEntry.BAQTransaction.TestCallListBckg()
at Ice.UI.App.BAQDesignerEntry.BAQTransaction.<>c__DisplayClass226_0.<BeginExecute>b__0()
at System.Threading.Tasks.Task.InnerInvoke()
at System.Threading.Tasks.Task.Execute()
```