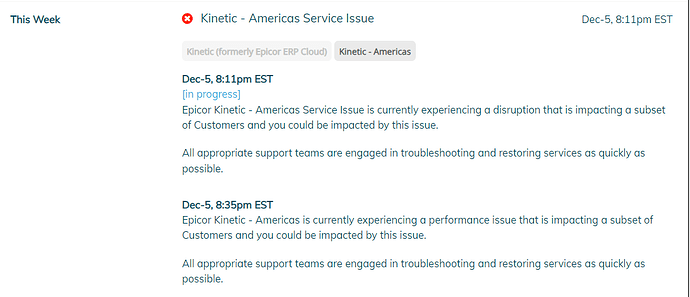

We went down at 4pm Pacific and called support immediately. It took them over an hour to post the outage, and now they are calling it a performance issue? It’s not a performance issue when your transaction logs are full and nobody can even log in. Going on two hours now.

Alisa, how did this go in the end?

After being down for over 2 hours, they finally got us back up, but with no explanation of how they could have let this happen. It’s pretty outrageous imo. Managing transaction log backups is fundamental to hosting a SQL Server database.

In the end we’ll all be forced to the cloud, simply because consumer apps have moved the market to the point where on-prem hardware and OS development stop, even though enterprise apps are nowhere near ready. Consumer apps are nowhere near as critical, and the cloud is built for them. For example, my bank’s website has glitches every couple of weeks; if my Epicor did that, I’d be out of a job.

It’s depressing isn’t it?

On the bright side, it is not your problem to urgently figure out what happened, which database caused the log overflow, and what needs to be done to fix it asap.

I never would have allowed the situation to occur in the first place.

Sounds like they should hire you for the cloud team.

I think Alisa was at Epicor before, right?

Disclaimer: I have zero knowledge of what happened, but I have imagination.

So imagine: you leave for the day while one of your coworkers is working on a function that opens a transaction, then goes through a long list of operations from the beginning of the year and calls some heavy method that also changes a lot of data. All of this is written to the transaction log, and the log cannot be cleared until that very first transaction is committed or rolled back. In that case, only an infinite amount of disk would keep you out of trouble.
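To make that imagined scenario concrete, here is a toy Python model. It is a sketch under stated assumptions, not how SQL Server actually manages its log: `ToyLog`, its methods, and the record format are all hypothetical, and log backups are not modeled at all. It only illustrates the one rule the scenario relies on: log records can be reused only once they are older than the oldest transaction that is still open.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ToyLog:
    """Toy model of a transaction log (hypothetical, not SQL Server's real design)."""
    capacity: int  # max number of log records the "disk" can hold
    records: List[Tuple[int, str]] = field(default_factory=list)  # (lsn, txn_id) pairs
    open_txns: Dict[str, int] = field(default_factory=dict)  # txn_id -> LSN of its first record
    next_lsn: int = 0

    def truncate(self) -> None:
        # Only records older than the oldest still-open transaction can be reused.
        # Assumption: log backups are not modeled; in SQL Server's full recovery
        # model a log backup is also needed before log space can be reused.
        cutoff = min(self.open_txns.values(), default=self.next_lsn)
        self.records = [r for r in self.records if r[0] >= cutoff]

    def write(self, txn_id: str) -> None:
        if len(self.records) >= self.capacity:
            self.truncate()  # try to reclaim space first
        if len(self.records) >= self.capacity:
            raise RuntimeError("transaction log full")
        self.open_txns.setdefault(txn_id, self.next_lsn)
        self.records.append((self.next_lsn, txn_id))
        self.next_lsn += 1

    def commit(self, txn_id: str) -> None:
        # Committing (or rolling back) ends the transaction and unpins its log records.
        self.open_txns.pop(txn_id, None)


if __name__ == "__main__":
    log = ToyLog(capacity=5)
    log.write("year_end_job")  # hypothetical long-running transaction that stays open
    for i in range(4):  # plenty of small, committed work afterwards
        log.write(f"order_{i}")
        log.commit(f"order_{i}")
    try:
        log.write("new_order")  # nothing can be truncated: the open job pins LSN 0
    except RuntimeError as err:
        print(err)  # prints "transaction log full"
    log.commit("year_end_job")  # the long transaction finally commits
    log.write("new_order")  # truncation now frees space and the write succeeds
    print(len(log.records))  # prints 1
```

Note that the committed `order_*` transactions do not help: they sit behind the open job's first record, so none of their space can be reclaimed. In real SQL Server, this kind of blockage is reported as `ACTIVE_TRANSACTION` in the `log_reuse_wait_desc` column of `sys.databases`, and `DBCC OPENTRAN` shows the oldest active transaction.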

Ancient history.

Come on, Alisa,

Sh*t happens; it happens to you and me daily. Yesterday our Automation System broke because we checked the wrong box while working on something else a couple of weeks ago. That one wrong checkbox did nothing for 3 weeks (it started a countdown that nobody knew existed), then yesterday, boom, nothing worked.

A critical automation didn’t run and several orders fell behind. None of us wanted that to happen, but it did. It took a few hours and a lot of frustrating phone calls to figure out that we had checked the wrong box 3 weeks earlier and that it had triggered a 3-week “trial” that broke our license. Once we knew, we corrected it as quickly as we could.

I don’t think it is fair to say you would never have allowed it to occur in the first place (unless you have the power to predict the future). Crap happens. Hopefully they have now put something in place to prevent it from happening again, but nobody can predict everything that will go wrong.