I found this talk about changing our thoughts about the software development lifecycle very compelling…at least for DevOps nerds.

thanks Mark, that’s very interesting.

I have a sense of something just beyond my grasp, in the application of this kind of thing (whether SDLC, DevOps or her “revolution” model) to a company that is producing hard goods but constantly developing its software ecosystem.

I wouldn’t say she’s wrong on any counts, and yet she, like just about everything out there on development work, doesn’t really address the management of business goals in the USE of the software product as opposed to the SALE of the software product.

While software companies are increasingly taking customer needs into account, they still need to focus on their own business goals, and when those don’t align with customers’ there’s a conflict where (in my opinion) the customer loses.

The same applies to a hard-goods manufacturer, of course. There’s an analogy with designing your hard product for the end user, versus designing your product as a business opportunity for your distributor. The latter will likely create more profits but the former might be better - and increasingly absent in today’s markets - for the actual consumer.

I haven’t found much out there, either from Epicor or in the DevOps nerdiverse, on architecting and developing a company’s internal software ecosystem, and I’d really like to understand that in a systematic, observable, visual way.

This was the position of Jeff Bezos on how to do that:

So you NEVER hit a database directly, never talk to a mail server directly, etc…

hm, that sums it up nicely

APIs are a start, and they need to be secured. The next thing that has emerged is Event Sourcing to orchestrate the APIs.

interesting, so we’d (1) limit access to the system to API access only; (2) specify, I guess? the only allowed locations for executable programs, (3) log any events that occur in those locations, (4) implement devops procedures for actively making changes to those locations

Something like that? Or am I missing your point?

If I were making a checklist, it would go like:

- Understand the goals of the company first and foremost. Always, always, always. Don’t let technology or solutions drive IT strategy.

- Knowing the goals, identify the resources required for business continuity and prioritize them.

- Establish an identity system that identifies people, applications, and devices. Location doesn’t matter nearly as much in today’s connected world.

- (The hard part) Determine: who, what, when, where, and why an identity requires access to a resource.

- NOW use APIs to control access to services.
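To make the last three checklist items a bit more concrete, here is a minimal Python sketch of a deny-by-default access check. Everything in it is hypothetical and only for illustration: the `Identity` and `AccessRule` types, the rule entries, and names like `orders-api` are not from Epicor or any real product. The "where" question is left out to keep it short.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Identity:
    name: str
    kind: str  # "person", "application", or "device"


@dataclass(frozen=True)
class AccessRule:
    identity: str  # who
    resource: str  # what
    start: time    # when: start of the allowed window
    end: time      # when: end of the allowed window
    reason: str    # why (kept for auditing, not used in the check)


# Hypothetical rules; a real system would load these from the identity provider.
RULES = [
    AccessRule("shipping-app", "orders-api", time(0, 0), time(23, 59), "print pack slips"),
    AccessRule("jsmith", "payroll-api", time(8, 0), time(17, 0), "payroll clerk"),
]


def is_allowed(identity: Identity, resource: str, at: time) -> bool:
    """Deny by default; allow only if a rule matches identity, resource, and time."""
    return any(
        rule.identity == identity.name
        and rule.resource == resource
        and rule.start <= at <= rule.end
        for rule in RULES
    )
```

The API layer would call something like `is_allowed(...)` before touching the service behind it, so the rules live in one place instead of being scattered through each application.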

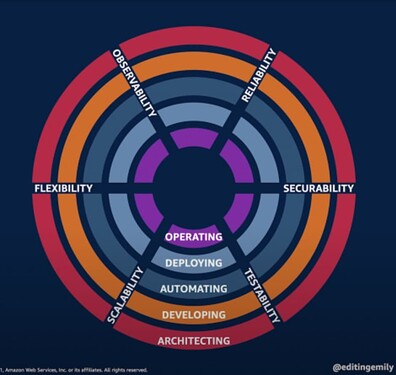

Here’s where the new development lifecycle comes into play. Like Agile and other methodologies that aren’t Waterfall, requirements for applications are fluid. Rarely can one know the actual requirements before starting to code. We may know what people ask for and code for that, but often our customers do not know what they need, or they leave out important details (compliance, timing, etc.). And even when we do know, things change. This speaks to the Flexibility spoke.

A current example is all of the trouble we have with sending emails from Epicor:

- Controlling when they are sent

- Controlling who should get them (Not enough space for several addresses)

- Content formatting (Templates)

- Conditionally sending emails (testing, reprinting, …)

- Attachment handling (naming, combining, …)

- Ability to preview before send

- Handling bounces

- Handling SMTP Errors

Burying all these responsibilities inside of Epicor doesn’t make sense. For one, all of Epicor products need to do these things. Why duplicate this code for each and every product? Make notification a service and pull all the complexity out of each package.

And while we’re at it, why email? Why not send documents to a repository and let people subscribe to the documents they have permission to see and get notified (Teams, Slack, Webhook, etc.)?

I’m getting into the weeds, but my point is that we should think about those six spokes in all of our roles: architects, developers, and operations. Once the notification service is abstracted, it becomes more flexible and reliable because I can swap out an SMTP provider. It becomes more secure because I can use the best security methods available (TLS 1.2+). It becomes more scalable because I can add SMTP servers or services to send high volume. And each process is easier to see (observable) because we split up message data, message formatting, attachment processing, and message delivery.
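As a rough illustration of pulling notification out into its own service, here is a small Python sketch. The names (`Message`, `Channel`, `InMemoryChannel`, `NotificationService`) are made up for this example and do not correspond to any Epicor API. The in-memory channel stands in for a real SMTP, Teams, Slack, or webhook provider so the sketch runs without a network.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Message:
    recipients: list[str]
    subject: str
    body: str


class Channel(Protocol):
    """Anything that can deliver a message: SMTP, Teams, Slack, webhook, ..."""
    name: str

    def deliver(self, message: Message) -> None: ...


@dataclass
class InMemoryChannel:
    """Hypothetical stand-in for a real provider; records what it 'sent'."""
    name: str
    sent: list[Message] = field(default_factory=list)
    fail: bool = False  # set True to simulate a provider outage

    def deliver(self, message: Message) -> None:
        if self.fail:
            raise ConnectionError(f"{self.name} unavailable")
        self.sent.append(message)


class NotificationService:
    def __init__(self, channels: list[Channel]) -> None:
        self.channels = channels

    def notify(self, message: Message) -> str:
        """Try each channel in order; return the name of the one that delivered."""
        for channel in self.channels:
            try:
                channel.deliver(message)
                return channel.name
            except ConnectionError:
                continue  # fall through to the next provider
        raise RuntimeError("no channel could deliver the message")
```

The calling application only ever sees `notify(...)`. Swapping an SMTP provider, adding a second one for volume, or adding a Teams channel means changing the list of channels, not the application.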

To get a better feel for this, check out these IT Revolution podcast episodes from Gene Kim (Mr. DevOps) which talk about how Walmart is using Event Sourcing and functional programming for their website.

ok, very useful and interesting. I did the first podcast and oof, a lot to take in at once. Suddenly though, if nothing else I’m starting to see how the ability for data to flow, whether human-consumable or trigger-driving, could be the real bottleneck even in a small business - if not to throughput, probably still to growth and scalability. And quite likely to throughput too, as decisions and activities depend on it.

But wow, out of my depth.

Nah. You can totally do this. The functional terminology is tough to get through, but the idea about queueing transactions and THEN applying them makes a lot of sense. It gives you the capability to recover more easily, replay, test, etc. The second podcast is conversational and Gene asks good questions. I heard a podcast about how Stack Overflow uses CQRS (Command Query Responsibility Segregation) to make it lightning fast. It’s not a pattern to use everywhere but when called for, it works well.
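Here is a tiny Python sketch of the "queue the transactions, THEN apply them" idea. It is only an illustration under my own assumptions: the `Event` shape, the `received`/`shipped` kinds, and the part names are invented, and it says nothing about how Walmart actually built theirs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str  # "received" or "shipped" (hypothetical event kinds)
    part: str
    qty: int


def apply(stock: dict[str, int], event: Event) -> dict[str, int]:
    """Pure function: returns a new state and leaves the old one untouched."""
    if event.kind == "received":
        delta = event.qty
    elif event.kind == "shipped":
        delta = -event.qty
    else:
        raise ValueError(f"unknown event kind: {event.kind}")
    new_stock = dict(stock)
    new_stock[event.part] = new_stock.get(event.part, 0) + delta
    return new_stock


def replay(events: list[Event]) -> dict[str, int]:
    """Rebuild current state from nothing by applying the log in order."""
    state: dict[str, int] = {}
    for event in events:
        state = apply(state, event)
    return state


# The log is the source of truth; state is always derived from it.
log = [
    Event("received", "WIDGET", 10),
    Event("shipped", "WIDGET", 3),
    Event("received", "GADGET", 5),
]
```

Because state is just the log replayed, recovery is `replay(log)`, "what did it look like two events ago" is `replay(log[:-2])`, and a test is a list of events plus an expected result.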

In my quest to steal more of your free time…

I am a big fan of the ITIL Framework. We could all just adopt that and life becomes peachy.

Bigger fan of ITIL 4 tho…but yes. It’s a nice framework that fits in nicely with Digital Transformation and Zero Trust. It’s like they planned it that way!

what does this look like?

edit - even as I hit Reply, I realize this is the crux of the matter for us. It must be some form of Classes and Instances but I haven’t heard this particular step spelled out before. What might that look like? Is there a standard?

It looks cloudy. (You walked right into this one!)

https://azure.microsoft.com/en-us/services/active-directory/

OAuth is the standard and OpenID Connect extends it.

wow. Dad jokes and now this

this is a great collection, thank you very much

Note: At this time, Epicor only interfaces with Azure Active Directory. Until we hear otherwise, you may want to factor that into any decision about choosing an Identity Provider.

Yes, our 10.2.700 plays well with conventional active directory, but in building my first K21 on-prem it seems that doesn’t work. The docs say Azure AD, and until now I thought they worked similarly. So far, even with tcp/windows still there, I’ve only been able to log in with a username and password, both on the server and in the browser. Upgrading our domain control to Azure AD is probably next.

There is no upgrading AD to AAD. They are two different beasts and coexist. What people do is move to a hybrid model of identity where AD and AAD sync common elements. This lets you keep AD and start getting the benefits of AAD (MFA, Application Registration, managing non-Windows devices, Passwordless, Managed Identities, …)

ah, interesting, that makes sense, thanks for the insight.