Hey all,

I think we’ve already passed a lot of this info to you via a support case (which I hope has reached you by now), but just in case, here’s a direct discussion of it.

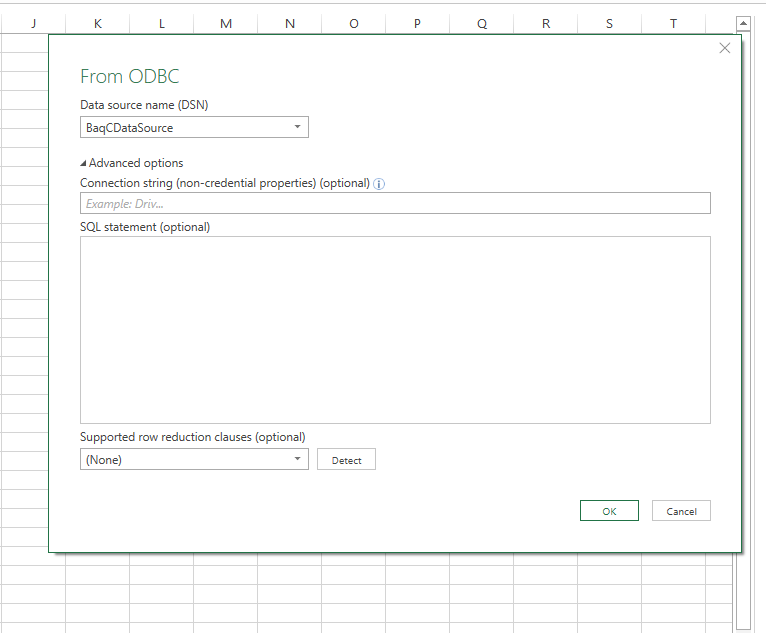

So here’s the deal with Excel and Power Query clients and our API keys. Long story short, we needed to add API keys that don’t themselves give you any rights to do anything, but still require a user login, so that we can restrict and monitor what can be done by application (say, a mobile CRM) as well as by user. There is another way to build API keys, where the key itself is all you need to log in and make calls, and we have that as a maybe on the future roadmap.
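To make the difference between the two key models concrete, here is a rough sketch of what the request headers look like in each case. The header name `X-API-Key`, the sample credentials, and the function names are placeholders I made up for illustration, not a statement of what our services (or any client) actually use, so check the API docs for the real names.

```python
import base64


def basic_auth(user: str, password: str) -> str:
    """Build a standard HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def login_plus_key_headers(user: str, password: str, api_key: str) -> dict:
    """Current model: the key identifies the application, the login identifies the user.

    'X-API-Key' is a hypothetical header name used only for this sketch.
    """
    return {
        "Authorization": basic_auth(user, password),
        "X-API-Key": api_key,
    }


def key_only_headers(api_key: str) -> dict:
    """Possible future model: the key alone is the credential, no user login."""
    return {"X-API-Key": api_key}


if __name__ == "__main__":
    print(login_plus_key_headers("alice", "secret", "app-key-123"))
    print(key_only_headers("service-key-456"))
```

The first shape carries two pieces of identity on every call (who the user is, and which application is calling), while the second carries only one.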

The Microsoft folks behind the query component used in Excel (and other things) assumed that API keys are always authorization keys (no login), so the component throws an error if you combine a login with an API key, because it treats two login methods at the same time as invalid. In our services that assumption is not correct. We found that out relatively late in our v2 release testing process.

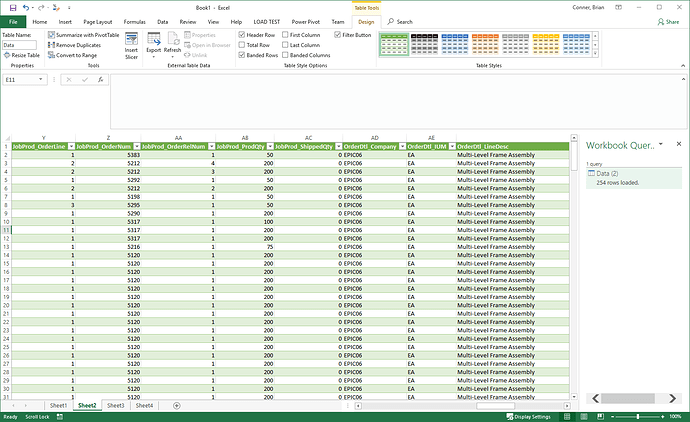

We can’t drop the login + key behavior, which we need for applications that log in with different user accounts and act with those users’ rights, nor can we drop required keys, so instead we opted (for now) to direct folks back to API v1. While application integrations are our primary use case for REST, we do care about other applications that know how to connect and consume, so we have a few options that may enable this in the future, but we have not yet implemented any of them. In the meantime the answer is still: use v1. We understand that many of the newer OData-consuming apps assume OData v4, which leaves you in a bit of a catch-22.

The options, just FYI, are:

- Add security-imbued API keys. This is what the Excel connector is expecting, and it was on our backlog, but we have some security concerns around it so we have not yet pulled the trigger. Basically these are ERP service accounts bound to an API key, plus required restrictions on which APIs they can call. Easy for API <-> API integration, but not appropriate for apps, which is what our keys are tuned for now.

- Add an alternate way to pass the key that won’t trigger this bad assumption, specifically for these connectors, such as accepting the key in the URL in some other way.

- Stop trying to use our existing REST as an ETL source and create our own first-class connectors. I will say we’re very aware that our REST services are really not built with bulk ETL or ongoing streaming ETL in mind, so even if you make it work it’s still not a great solution in that it’s quite inefficient. Today it’s the only option in SaaS, but that won’t be true forever.

Can I ask - what’s your actual data warehouse platform that you’re trying to get data into?