Just close then ..

Beaker is my favorite muppet… namesake notwithstanding. I identify with this guy more.

Have you tried putting the specific fields you need into a BAQ and making a simple request? I’d love to know if it is any better.

Good question - the answer is “Negative, Ghostrider.”

Having worked with multiple 3rd party systems in my career, I am a big proponent of the minimum customization approach. If I can make this work with the stock APIs, I will do that.

3rd Party systems such as Epicor, PeopleSoft, Global360 (I think it’s OpenText now), PCMiler, etc. release updates on regular cadences. That leads me to two personal guidelines:

- Avoid customizations. Customizations can impact the application of system updates and require additional testing.

- Insulate domain systems from 3rd party vendor products. Use domain APIs as an insulation layer between your business systems and the 3rd party system. Ensure your domain API has adequate automated regression and acceptance testing, ideally in a CI/CD pipeline.
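To make the second guideline a bit more concrete, here is a minimal sketch of what I mean by an insulation layer. Every name in it (`OrderSummary`, `IOrderGateway`, `FakeOrderGateway`) is made up for illustration and is not part of Epicor or any other vendor product. The business systems only ever see the interface; a vendor-backed adapter and an in-memory fake for regression tests would both implement it.

```csharp
using System;
using System.Collections.Generic;

// Usage: callers hold the interface, never the vendor-specific class.
var gateway = new FakeOrderGateway();
gateway.Add(new OrderSummary(24528, 3));
IOrderGateway api = gateway;
Console.WriteLine(api.GetOrder(24528));

// Hypothetical domain model: only the fields the business systems need.
public record OrderSummary(int OrderNum, int LineCount);

// Domain-facing contract. Business systems depend on this, not on the vendor.
public interface IOrderGateway
{
    OrderSummary GetOrder(int orderNum);
}

// In-memory stand-in for automated regression tests. A vendor-backed adapter
// (for example one calling the stock REST API) would implement the same interface.
public class FakeOrderGateway : IOrderGateway
{
    private readonly Dictionary<int, OrderSummary> _orders = new();

    public void Add(OrderSummary order) => _orders[order.OrderNum] = order;

    public OrderSummary GetOrder(int orderNum) =>
        _orders.TryGetValue(orderNum, out var order)
            ? order
            : throw new KeyNotFoundException($"Order {orderNum} not found");
}
```

When the vendor ships an update, only the adapter behind the interface should need retesting, and the same acceptance tests can run against it in the pipeline.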

HornSounder.efxj (3.7 KB)

Refs →

Newtonsoft

OrderHed, OrderDtl, OrderRel

var sw = new Stopwatch();

sw.Start();

var d = new Dictionary<string, object>()

{

["OrderHed"] = Db.OrderHed.Where(x => x.OrderNum == orderNum).ToList(),

["OrderDtl"] = Db.OrderDtl.Where(x => x.OrderNum == orderNum).ToList(),

["OrderRel"] = Db.OrderRel.Where(x => x.OrderNum == orderNum).ToList()

};

var tString = JsonConvert.SerializeObject(d, Formatting.Indented);

JObject root = JObject.Parse(tString);

var fieldsToRemove = new[] { "$id", "EntityKey" };

foreach (JArray table in root.Properties().Select(p => p.Value).OfType<JArray>())

{

foreach (JObject row in table.Children<JObject>())

{

foreach (string field in fieldsToRemove)

{

row.Remove(field);

}

}

}

output = root.ToObject<DataSet>();

output2 = $"Processing Time -> {sw.ElapsedMilliseconds.ToString()} ms";

See what that’ll do.

Of course now I remember you’re new to functions.

Import, add your company to the security, save, promote to production.

You can then see it in swagger etc.

I will get back to you after running my other tests - honestly will likely be tomorrow.

It’ll just give you something to play with.

I really want to see the difference in the processing time vs the total time.
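If it helps, here is a rough sketch of how the two numbers could be compared on the client side. It assumes the function returns the timing string in the `Processing Time -> N ms` format shown above; `TimingMath` and the sample values are made up for illustration, and the total round-trip time would come from whatever client you use (a `Stopwatch` around the call, Postman, etc.).

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical sample values: total round trip as measured by the client,
// and the timing string returned by the function in output2.
long totalMs = 42;
string output2 = "Processing Time -> 8 ms";

long procMs = TimingMath.ParseProcessingMs(output2);
Console.WriteLine($"server processing: {procMs} ms, everything else: {TimingMath.OverheadMs(totalMs, procMs)} ms");

public static class TimingMath
{
    // Pulls the millisecond count out of "Processing Time -> N ms".
    public static long ParseProcessingMs(string timing)
    {
        var m = Regex.Match(timing ?? "", @"Processing Time -> (\d+) ms");
        if (!m.Success) throw new FormatException($"Unexpected timing string: '{timing}'");
        return long.Parse(m.Groups[1].Value);
    }

    // Time spent outside the function body: network, auth, serialization, and so on.
    public static long OverheadMs(long totalMs, long processingMs) =>
        Math.Max(0, totalMs - processingMs);
}
```

The "everything else" number is the part a function cannot improve, so it is the one worth comparing against the OData request.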

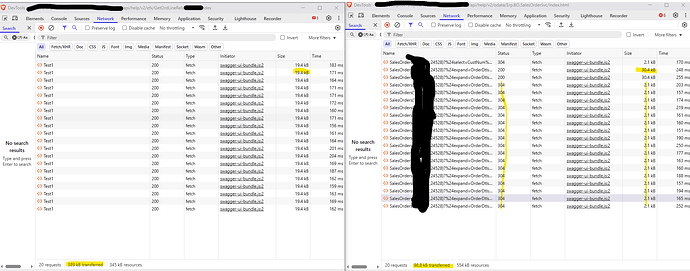

interesting observations:

- they’re about the same perf when not reducing columns

- Function response time is slightly better (even with bigger payloads)

- OData returns 304 Not Modified after a couple of requests, so caching makes the response payload smaller, but response time doesn’t change much

- your proc time is 7-8 ms.

- FWIW, my pilot tables have only a handful of small orders

- look forward to seeing results with big orders and smaller payloads
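On the 304 point, this is roughly how a conditional GET works in general. I don't know which validator Epicor's OData endpoint actually uses, so treat the ETag here as an assumption; the URL is a placeholder and `StubHandler` is a fake server so the sketch runs offline.

```csharp
using System;
using System.Linq;
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading;
using System.Threading.Tasks;

var client = new HttpClient(new StubHandler());
var url = "https://example.invalid/odata/SalesOrders(24528)"; // placeholder URL

// First request: full body plus a validator (ETag) from the server.
var first = await client.GetAsync(url);
var etag = first.Headers.ETag;

// Second request: send the validator back, "only send the body if it changed".
var second = new HttpRequestMessage(HttpMethod.Get, url);
second.Headers.IfNoneMatch.Add(etag);
var reply = await client.SendAsync(second);

Console.WriteLine($"{(int)first.StatusCode} then {(int)reply.StatusCode}");

// Stand-in for the server: answers 304 with no body when the ETag still matches.
public class StubHandler : HttpMessageHandler
{
    private static readonly EntityTagHeaderValue Current = new("\"v1\"");

    protected override Task<HttpResponseMessage> SendAsync(HttpRequestMessage request, CancellationToken ct)
    {
        bool unchanged = request.Headers.IfNoneMatch.Any(t => t.Tag == Current.Tag);
        var response = unchanged
            ? new HttpResponseMessage(HttpStatusCode.NotModified)
            : new HttpResponseMessage(HttpStatusCode.OK) { Content = new StringContent("{\"OrderNum\":24528}") };
        response.Headers.ETag = Current;
        return Task.FromResult(response);
    }
}
```

A 304 saves the body, but the request still makes the full round trip and the server still has to decide whether anything changed, which would fit with the payload shrinking while the response time stays about the same.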

I should really pop some more tests in there to see what works well.

No idea if this is horrible or ok.

A faster fiber connection didn’t make much diff but a larger order (3 lines x 3 rels ea) does seem to slow down the OData $expand (again, even tho it’s appearing to return 304 response) more so than it slows down your function. (your proc times went from 7-8 ms to 9-11ms on this order)

I made a function one time for @utaylor that made like 10k lines or releases..

I guess I could make one real big lol.

I’m not a function guy (yet) but curious whether System.Linq.Dynamic.Core can be referenced?

Would be neat to see if a dynamic query function with simple $select, $filter, $expand equivalents is faster than OData > WebAPI > EF > Linq > db > EF > WebApi > OData > json or whatever their backend is doing.

something like (AI code):

Function-Like class

using System;

using System.Collections.Generic;

using System.Data;

using System.Diagnostics;

using System.Linq;

using Newtonsoft.Json;

using Newtonsoft.Json.Linq;

using System.Linq.Dynamic.Core;

public class OrderDataService

{

public (DataSet Data, string Timing) GetOrderData(

int orderNum,

string select,

string expand)

{

var sw = new Stopwatch();

sw.Start();

// Build dynamic query using select and expand

var query = DynamicQueryBuilder.BuildQuery(

Db.OrderHed.AsQueryable(),

select,

expand

);

// Apply filter and execute

var result = query.Where("OrderNum == @0", orderNum).ToDynamicList();

// Wrap in dictionary for serialization

var data = new Dictionary<string, object>

{

["OrderHed"] = result

};

// Serialize and convert to DataSet

var json = JsonConvert.SerializeObject(data, Formatting.Indented);

var root = JObject.Parse(json);

var dataSet = root.ToObject<DataSet>();

var timing = $"Processing Time -> {sw.ElapsedMilliseconds} ms";

return (dataSet, timing);

}

}

DynamicQueryBuilder Class

using System;

using System.Collections.Generic;

using System.Linq;

using System.Linq.Dynamic.Core; // needed for the string-based Select below

using System.Text.RegularExpressions;

public static class DynamicQueryBuilder

{
// TODO: add filter param parser

// Dynamic LINQ's string-based Select returns the non-generic IQueryable.
public static IQueryable BuildQuery<T>(

IQueryable<T> source,

string select,

string odataExpand)

{

var normalizedExpand = NormalizeODataExpand(odataExpand);

var expandTree = ParseExpand(normalizedExpand);

var projection = BuildProjection(select, expandTree);

return source.Select(projection);

}

private static string BuildProjection(string rootSelect, Dictionary<string, ExpandNode> expandTree)

{

var projection = $"new ({rootSelect}";

foreach (var (navProp, node) in expandTree)

{

var nestedSelect = string.Join(", ", node.SelectFields);

var nestedProjection = node.ExpandFields.Count > 0

? BuildProjection(nestedSelect, node.ExpandFields)

: $"new ({nestedSelect})";

projection += $", {navProp} = {navProp}.Select({nestedProjection})";

}

projection += ")";

return projection;

}

private static Dictionary<string, ExpandNode> ParseExpand(string expand)

{

var result = new Dictionary<string, ExpandNode>();

if (string.IsNullOrWhiteSpace(expand)) return result;

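// Greedy (.+) so a nested expand keeps its closing parenthesis.
// Note: this simple regex only handles a single chain of expands, not siblings alongside a nested expand.
var pattern = @"(\w+)\(([^;()]+)(?:;(.+))?\)";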

var matches = Regex.Matches(expand, pattern);

foreach (Match match in matches)

{

var navProp = match.Groups[1].Value;

var selectFields = match.Groups[2].Value.Split(',').Select(f => f.Trim()).ToList();

var nestedExpand = match.Groups[3].Success ? match.Groups[3].Value : null;

result[navProp] = new ExpandNode

{

SelectFields = selectFields,

ExpandFields = ParseExpand(nestedExpand)

};

}

return result;

}

private static string NormalizeODataExpand(string odataExpand)

{

if (string.IsNullOrWhiteSpace(odataExpand)) return "";

var normalized = Regex.Replace(odataExpand, @"\$select=([^;()]+)", m => m.Groups[1].Value);

normalized = Regex.Replace(normalized, @"\$expand=", "");

normalized = Uri.UnescapeDataString(normalized);

return normalized;

}

private class ExpandNode

{

public List<string> SelectFields { get; set; } = new();

public Dictionary<string, ExpandNode> ExpandFields { get; set; } = new();

}

}

usage:

var service = new OrderDataService();

var select = "OrderNum,OrderDate,CustNum";

var expand = "OrderDtl($select=OrderNum,OrderLine,PartNum;$expand=OrderRel($select=OrderNum,OrderLine,OrderRelNum,ReqDate))";

var (dataSet, timing) = service.GetOrderData(24528, select, expand);

Console.WriteLine(timing);

Unfortunately not.

Gonna run for a minute there Josh…

Huh? If you’re referring to collapsing large blocks of awesome code (that can’t work cuz Epicor is sandboxed) in order to not clutter the poor OP’s thread with said awesome code, then oh ok.

No he’s saying making 10k lines is gonna take a bit lol.

Sorry. Duh… [Josh goes and gets more coffee]

That sounds like a grand idea.

And then afterwards… if you have jobs made to those releases or need to mass change something, it’s going to take a long time too… The problem with the company trying to do this is that the order will suddenly change in the middle of it, and they need to redo the jobs linked to the 10,000 lines, or change addresses or whatever.