We went from 10.500.18 to 10.500.31 without a problem. Actually MICR was the only thing causing some issues. If you have MICR, I can give you a patch… other than that; it was minor upgrade, no issues. We have 13 Multi-Company processes, Inter-Company, etc… all was good.

Haso - so I ran your script yesterday and MRP ran fine last night. Of course, it had to run fine the night before too (prior to setting the fill factor) just because it hates me and wants me to question whether this is going to work or not. Maybe it just like it when I check in several times every night to see how it’s doing. It’s lonely. ![]()

I did some reading up on fill factor this morning and I have a question: is it possible there is a typo in your script above - namely, you said 40% but then the script looks to me like it is setting it to only 4%?

PS - Some of the articles I’m reading:

Maybe my fill_factor is set to 4% and not 40% which I assumed because 0 = 100%. Nevertheless = 5 solved my issue but the goal was to match my page count to be as close as possible to JobAsmbl. Perhaps I don’t need to go that low, because in another Thread Raj Tapde from Epicor said I can set it to = 20.

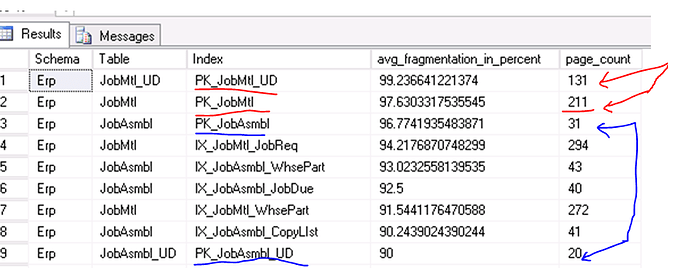

Close Enough Good for JobAsmbl - but JobMtl caused Deadlocks when I had it this way:

So try 40 and not 4% =) and then work your way down… Its all because of the way the _UD index works.

Queries I use to Validate:

SELECT fill_factor, * FROM sys.indexes where name in ('PK_JobAsmbl_UD', 'PK_JobMtl_UD');

SELECT dbschemas.[name] as 'Schema',

dbtables.[name] as 'Table',

dbindexes.[name] as 'Index',

indexstats.alloc_unit_type_desc,

indexstats.avg_fragmentation_in_percent,

indexstats.page_count

FROM sys.dm_db_index_physical_stats (DB_ID(), NULL, NULL, NULL, NULL) AS indexstats

INNER JOIN sys.tables dbtables on dbtables.[object_id] = indexstats.[object_id]

INNER JOIN sys.schemas dbschemas on dbtables.[schema_id] = dbschemas.[schema_id]

INNER JOIN sys.indexes AS dbindexes ON dbindexes.[object_id] = indexstats.[object_id]

AND indexstats.index_id = dbindexes.index_id

WHERE indexstats.database_id = DB_ID() AND dbindexes.[name] LIKE 'PK_JobMtl%' OR dbindexes.[name] LIKE 'PK_JobAsmbl%'

ORDER BY indexstats.avg_fragmentation_in_percent desc

Also for those wondering if this could all be only related to latency on hardware, it’s not. We moved it from VMWare even to a Physical Host and I can replicate the issue on any database when creating JobMtl_UD or JobAsmbl_UD columns and full regening database, even on SSD Drives.

If you have a test environment set it back to 0 or 100 and re-run MRP eventually within 1 or 2 attempts you will get it to crash. Then set it back to 5 or 40 or 20 and run MRP Full Regen multiple times, should be good then

So, I did FillFactor = 40 and here are my fragmentation / page count results now:

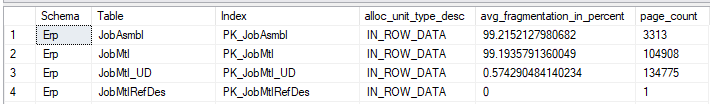

Apparently we have a lot more rows in these tables than the database you are showing above?

Shouldn’t we also be concerned about the other indexes (primary keys) having such a super high fragmentation? I guess there is a difference in that the other primary keys in Epicor seem to be (Unique, Non-Clustered) and these _UD tables are (Clustered)

Guess I might still need to do some more reading.

Well I am able to knock my fragmentation down to 10% but as soon as I run MRP it goes back up to 99%. I think it has to do with the way Epicor has Index Keys set… I pinged @Edge once on twitter about it and he agrees that there is room for improvement. Hopefully Epicor keeps optimizing their indexes.

Over the weekend the evil MRP crashing after an hour of trying to “delete unfirm jobs” returned with no solution in sight. So I spoke with my cousin who knows a thing or two about SQL databases as well, and he reminded me of the awesome power of being able to look at the currently running queries using a sproc like sp_WhoIsActive2 or my-favorite-query.

So I kicked off MRP Full Regen, let it get to the Deleting Unfirm Jobs step, waited a few minutes, and then ran sp_WhoIsActive2 - and there was the culprit, a query that appears to be clearing Transfer Order suggestions and had been running for over 5 minutes already:

delete a

from Erp.TFOrdDtl a inner join Erp.PartDtl c on a.Company = c.Company and a.TFLineNum = c.TFLineNum

inner join Erp.JobHead b on c.Company = b.Company and c.JobNum = b.JobNum where b.Company = 'LEER'

and b.JobClosed = 0 and b.JobFirm = 0

Now I’m having deja vu. I turn this into a SELECT * statement instead of a delete and run it, but I have already guessed how many results there are going to be. Sure enough: zero. But even the select query takes several minutes to run. So I have SQL Query Analyzer show me the execution plan, and there is a table scan and a proposed missing index. I’m in kind of a hurry to get MRP working, so I took the proposed index as is - it might potentially be optimized more or have fewer included columns?

/*

Missing Index Details from SQLQuery3.sql

The Query Processor estimates that implementing the following index could improve the query cost by 61.1133%.

*/

USE [Epicor]

GO

CREATE NONCLUSTERED INDEX LEER_IX_PartDtl_JobNum

ON [Erp].[PartDtl] ([Company],[JobNum])

INCLUDE ([Type],[PartNum],[DueDate],[RequirementFlag],[Quantity],[AssemblySeq],[JobSeq],[OrderNum],[OrderLine],[OrderRelNum],[PONum],[POLine],[PORelNum],[PartDescription],[IUM],[SourceFile],[CustNum],[StockTrans],[FirmRelease],[RevisionNum],[TargetOrderNum],[TargetOrderLine],[TargetOrderRelNum],[TargetWarehouseCode],[TargetJobNum],[TargetAssemblySeq],[TargetMtlSeq],[Plant],[InvLinkNum],[PlantTranNum],[TFOrdLine],[TFOrdNum],[TFLineNum],[SourceDBRecid],[NonPart],[BOMChanged],[BaseQty],[BaseQtyUOM],[InvtyQty],[InvtyQtyUOM],[JobFirm],[PartDtlSeq],[SysRevID],[SysRowID],[ContractID])

GO

Now I can run the select statement and it takes only 10-20 seconds (and still returns zero results). The delete statement takes 2 to 3 minutes, but this is a big improvement over running for an hour and then timing out, killing MRP!

And now MRP is running in about an hour, or 1.5 hours, instead of 3-5 hours!

Can you show me how you did the whoisactive to show the culprit query? I have the feeling we have similar issues. MRP net changes run fine for us but MRP full regen in 3 different companies at 3 different times seems to be crashing. We copy our DB over to different Epicor servers and it runs fine. Currently working with Epicor and we are having trouble finding the solution on this one. we are getting this in MRP runs when it crashes:

An error occurred trying to run a sub-task:

System.TimeoutException: This request operation sent to net.tcp://[servername]/EpicorERP/Ice/Lib/RunTask.svc did not receive a reply within the configured timeout (00:05:00). The time allotted to this operation may have been a portion of a longer timeout. This may be because the service is still processing the operation or because the service was unable to send a reply message. Please consider increasing the operation timeout (by casting the channel/proxy to IContextChannel and setting the OperationTimeout property) and ensure that the service is able to connect to the client.

We’re on 10.2.300.40

I’m sorry if this topic has been dead for awhile but we recently started encountering a problem running full regen when it gets to deleting records from TFOrdDtl. As mentioned above, it hangs on deletions from that table and then finally errors out. I saw the proposed solution above and it involves creating an index for Erp.PartDtl.

The problem is a Non-Unique, Non-Clustered index already exists called ‘IX_PartDtl_JobNum’, but it only contains the following fields:

[Company] ASC,

[JobNum] ASC,

[AssemblySeq] ASC,

[Type] ASC,

[JobSeq] ASC

Besides, I didn’t think Epicor wanted customers redefining their indexes.

I can understand Epicor not wanting you to redefine indexes they have created, however, I have found significant performance gains by analysing the Epicor database and a creating your own additional indexes.

The key thing is to use a common naming convention, so you can easily identify the ones you have created.

Be sure to keep them all stored in a common folder or something too - when you upgrade Epicor significantly (I’m not sure about patches, but definitely service pack level upgrades), the process deletes and recreates all of the DB indexes and your indexes will no longer be around. Now, we’ve taken the approach of leaving that be, and only re-creating the indexes that seem to still be needed to keep Epicor performing well (another example: we ended up needing to create an index on PartMtl (or was it ECOMtl?) to keep Engineering Workbench from freezing up for 3-6 seconds every time you switched from one material record to another).

When adding a new index where one already exists for PartDtl, during the execution of DELETE for MRP what forces the system to use the new index as opposed to continue with the old index?

Is there any credence to adding an index to PartDtl for TFLineNum? The query from above:

delete a

from Erp.TFOrdDtl a inner join Erp.PartDtl c on a.Company = c.Company and a.TFLineNum = c.TFLineNum

inner join Erp.JobHead b on c.Company = b.Company and c.JobNum = b.JobNum where b.Company = 'MYCOMPANY' and b.JobClosed = 0 and b.JobFirm = 0

joins TFOrdDtl to PartDtl on TFLineNum. I see that PartDtl does not have an index for TFLineNum. Is there anything special I would need to do to “instruct Epicor” to use it? I did a Data Regen on the database. Data Dictionary Viewer sees the index I created. I’m just checking with my educated peers to see if I’m overlooking something obvious or making poor assumptions.

I usually only add an index when one is suggested when running with “Show Actual Execution Plan” checked in SSMS. Not saying that is the only good indexes to add, I just haven’t ventured out beyond that “shallow water.” Also, its my understanding that if you add an index that the Query Optimizer in SQL is already finding and suggesting you add, then the Query Optimizer itself will most likely switch to using your added index when running the query or queries that caused the suggestion in the first place. Basically put - nothing you need to do to switch Epicor to using the new index, it’s all handled by the SQL query engine.

Thank you for the feedback. I’ll check and see if the Query Optimizer recommends anything.

I did not get any recommendation for a new index. Furthermore, I realized that there is already an index for the field I was going to make one… it’s just not named the same as the field so I didn’t realize it. It’s called IX_PartDtl_TFLine and I was expecting IX_PartDtl_TFLineNum. So that’s out of the equation now also. I’ve got an active support ticket with EpicCare. We’re checking to see if there’s bad data in our system. Orphaned records, etc.

One thing I noticed that is different in my case than in yours - my query that is stuck is this (has plant references also):

delete a

from Erp.TFOrdDtl a

inner join Erp.PartDtl c on

a.Company = c.Company

and a.TFLineNum = c.TFLineNum

and a.Plant = c.Plant

inner join Erp.JobHead b on

c.Company = b.Company

and c.JobNum = b.JobNum

and b.JobClosed = 0

and b.JobFirm = 0

where

b.Company = @Company

and b.Plant = @Plan