Discussing recovery models, maintenance of transaction log files, and backup schemes can be a complicated topic–and I’ve never met a person that really enjoys it.

So, I have some questions:

- How much data is your company willing to lose if your SQL server fails hard and how much time can you be down while trying to recover?

- Not even a single transaction with no downtime? Possible, but, expensive and relatively complicated.

- Up to a day’s worth of data loss with no urgency on getting back operational? Inexpensive and relatively simple.

- Something between those extremes?

- How much in-house SQL DBA expertise do you have currently / could potentially have now?

- With more expertise more options are available.

- Are you doing anything with your transactional log (LDF) now? ← probably not.

- How much storage space do you have OFF of your SQL server to use for backups?

With those 4 questions answers, one can devise a solution that fits into a company’s operational needs in terms of transaction loss(with complexity increasing with loss of transactions decreasing), downtime, and cost.

Hang in there, I’ll respond to the actual question about LDF eventually

Potential backup strategies:

You want something super simple to implement, can only lose four hours of transactions, super simple to recover from with minimal downtime and disk space is infinite/don’t worry about how many versions of backups you have?

- Full backups every four hours

- Backups should be stored off the SQL server

- Set your recovery model to SIMPLE (as you aren’t doing anything with your transaction log) and ignore the PDT Config check on the RECOVERY model rule.

Not quite right for your needs? Most probably wouldn’t be OK with that much loss.

How about you want something not incredibly complicated (relatively speaking) to implement, can only lose at most one hour of transactions, simple to recover as you’ll have a script to help with the recovery restore with moderate downtime and disk space is less than infinite?

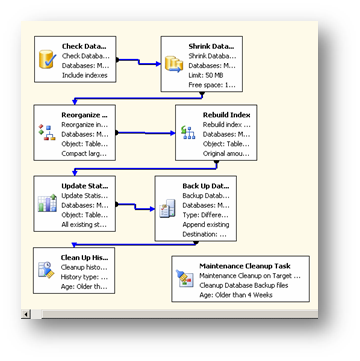

- Full backup every day

- Differential backup 12 hours after/before full backup

- Transaction log backup every hour

- TSQL script to do the restore order already created

- Leave your RECOVERY model at FULL

NOTE: changing this strategy from up to an hour of loss to 10 minutes requires nearly zero effort–just change the frequency of the transaction log backups.

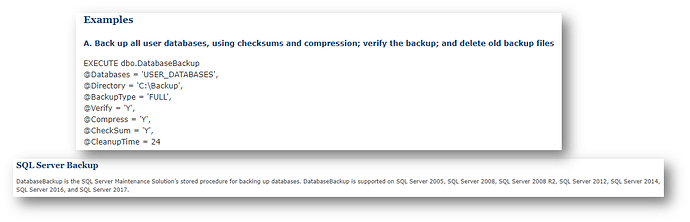

Are there simple ways to implement these backup strategies?

Of course! We live in an era where most routine SQL activities are solved by really knowledgable people to make them easy to implement.

There are lots of 3rd party backup solutions (redgate SQL backup pro, acronis, Litespeed, etc) as well. I don’t have an official opinion on any of them as I haven’t had a professional need for something other than some variation of what is above.

Recovery process:

- Latest full backup (Required).

- Latest differential backup (Optional)

- Unbroken sequence of transaction log backups (Optional).

All transaction logs should be restored with NO RECOVERY option (except for the last one).

- Prevents database from being accessed while restore process is taking place.

SOooOoOOOOooO, to the question of how to shrink your LDF file…

It depends on whether or not you are currently doing anything with your LDF file (transactional log backups, log shipping, replication, etc) or plan on doing anything that requires maintenance to your LDF file. If you are ok with completing some combo of full backups and differentials every X amount of time so you only lose up to X amount of transactions with latest full/latest differential, don’t want to deal with keeping track of an uninterrupted log chain, and have lots of space on a server that isn’t your SQL server, then, just set the recovery model to SIMPLE (and then implement a backup scheme that takes that into account) and simplify your life on the “something broke and I need to do something” front.

DISCLAIMER:

The conventional wisdom is that everyone should use full recovery with frequent transaction log backups to allow for point in time recovery, while shrinking the LDF during backup events. When properly implemented and tested frequently (a backup isn’t good unless it is tested as good), it’s the gold standard but, it has greater than zero implementation and recovery cost. Get a firm handle on what the business needs are, and a solution will present itself based on the 4 questions/parameters at the beginning. Never do more than what is needed to accomplish the goal.

Officially, the PDT config check should be simply followed as each one of those rules was created due of some other person’s misfortune and that rule is now a warning to everyone else so they don’t have to run into that same problem that prompted the rule.

Unofficially, the PDT config check (ERP Analyzer as well) should always be given the benefit of every doubt but if you think you could convince @Bart_Elia, @Edge, @Olga or me that you know why the recommendation is generally good but in your specific use case why a specific rule can be safely ignored, that’s alright. If you do ignore it for very good XYZ reason, remember that literally any performance problem you submit to Support you’ll be forced to hear the analyst tell you to change it though