Until it forgets the context and deletes half of your files ![]() but it is magical and I love how fast it makes me

but it is magical and I love how fast it makes me

Working as designed. ![]()

yes, it is piling up very fast ![]() I would not want to save it as final code.

I would not want to save it as final code.

But for the test script I need to run couple of times and communicate with LLM, database and files on disk it is so faster than to write myself

My wife’s company just had an all hands meeting where it told all their Engineering Staff to come up with “prototypes” of how to use Ai in their product. They scrapped their entire road map and this is their new one.

Literally told go make something with Ai in our product and we’ll pick which one to implement. Just find a problem that needs solving. She is besides herself (sad/frustrated). The mandate is literally “Implement Ai”… whatever that means. ![]()

![]()

![]()

![]()

![]()

EDIT - should have really included below quote for context. Implementing an AI app just for the sake of doing it…not good for an IT person’s nerves.

For our org, I developed a chat application that has multiple functions. Knowledge base, SQL queries (through Azure Relay), web search, and image gen for fun. One reason we opted for this route is cost control and observability. Most providers expect enterprise subscriptions to grant access to usage information for your own org, which means you could be throwing $30 per user a month into a fire when they use $0.05 a week. Your heaviest users, like developers, will cost you more than the subscriptions.

Streaming is key for users to understand why the request appears to be taking forever.

GPT-5’s recent launch was aimed at reducing hallucinations, deception, while increasing safety and performance across the board. The ability for these AIs to call tools (local functions or MCP servers, and provider functions) has improved drastically starting with o4-mini and o3. GPT-5 is the next level of this.

AI models, mostly proprietary ones, usually hallucinate because they do not have the information, but you’ll be amazed at the results when provided all relevant info. Prompting matters and evaluation matters in this non-deterministic setting.

Second @TomAlexander 's comments. Github Copilot in VSCode is a dream - pleasantly suprized at the improvement over just a few months ago - well worth the $10.

Some users have enabled M365 Copilot add-on so it can learn on Email, SharePoint, etc. Haven’t checked in with them or tried it myself, but, while it seems expensive at $30, if it saves a user an hour a month, I figure we break even. While we’d adopt more widly if the cost came down, it’s a fairly easy case to make for customer-facing employees and developers, IMO.

I find in Github Copilot for VSCode, it sometimes stops applying diffs to my active files but doesn’t forget. Especially when you ask it to generate md files documenting the project, To Dos, ideas, etc. If I sense it wandering, we go back to the docs and it’s back on track. I ask it to suggest updates to docs after most sessions and when I come back prompt ‘where’d we leave off’?

Third. And a year for $100.

I saved the console log from F12 into a file, opened it in VSCode, and asked Copilot to summarized the events:

Based on my analysis of the log file, here’s a summary of the key events that occurred:

ERP Application Part Management Workflow Summary

This log captures a comprehensive workflow in an ERP application for managing part information, specifically for Part Number R1037. Here are the main events:

1. Session Initialization

- Debug logging was enabled (hotkey 15 executed)

- EP-binding logging was toggled off

2. UI Clear Operation

- User initiated a “Clear UI” action with confirmation dialog

- System cleared multiple datasets:

Part,KeyFields,KeyFieldParams,GlbPart,PartUOMPlasticTax - UI was reset and navigation occurred to the “Details” page

3. Part Number Processing (R1037)

- Part Cross-Reference Lookup: System called

GetPartXRefInfoservice to validate part number - Part Validation: Confirmed part number “R1037” exists and matches user input

- Part Data Retrieval: Called

GetByIDservice to fetch complete part information

4. Data Loading Sequence

The system loaded various related data for part R1037:

- Plant Authorization: Set authorized plants to “MfgSys”

- Part-Plant Relationships: Configured plant-specific settings

- Cost Data: Loaded part cost information via

PartCostSearchSvc - Bin Information: Retrieved warehouse bin data via

PartBinSearchSvc - UOM & Tax Data: Fetched Unit of Measure and plastic tax information

- Image Data: Attempted to load part images

- Global Part Settings: Checked for global part configurations

5. Key Calculations & Settings

- COO (Country of Origin) Totals: Calculated percentage totals (both set to 0)

- Conversion Factor: Set to “1 BTL * 1 = 1.0000000 LB” (Bottle to Pound conversion)

- BackFlush Setting: Determined part is not set for backflushing (false)

- Inventory Attributes: Configured filters for inventory tracking

6. Final State

- Part R1037 successfully loaded with all related data

- UI re-enabled for user interaction

- System ready for further part management operations

Notable Technical Details

- Multiple REST API calls to ERP services (PartSvc, ImageSvc, PartCostSearchSvc, etc.)

- Extensive data binding operations with asynchronous updates

- Conditional logic evaluation throughout the workflow

- Plant “MfgSys” appears to be the primary manufacturing facility

This represents a typical ERP part lookup and data loading sequence, ensuring all related information is available for the user to view or modify the part record.

And for kicks, I asked it to map out events:

ERP Application Event Flow Map

Part Number R1037 Processing Workflow

📋 SESSION START

│

├── 🔧 Debug Logging Enabled (Hotkey 15)

├── 📊 EP-Binding Logging Disabled

│

└── 🔄 UI CLEAR OPERATION

│

├── 🖱️ OnClick_toolClearUI

├── ❓ Confirm_ClearUI (Dialog)

├── 🧹 Execute_ClearUI

│ ├── Clear Dataset: Part

│ ├── Clear Table: KeyFields

│ └── Clear Table: KeyFieldParams

│

└── 🔧 System Initialization

├── SysInitTemplateViews

├── initKeyFields (empty PartNum)

├── Clear Dataset: GlbPart

├── Clear Dataset: PartUOMPlasticTax

└── 🧭 Navigate to "Details" Page

📝 PART NUMBER INPUT & VALIDATION

│

├── 🔍 ColumnChanging_PartNum (User enters R1037)

├── 🔎 SearchForSimilarPart

├── 🌐 API Call: GetPartXRefInfo

│ ├── ✅ Response: Part R1037 found

│ ├── ❌ No serial warnings

│ ├── ❌ No question strings

│ └── ❌ No multiple matches

│

└── ✅ Part Number Validation Complete

🔄 KEY FIELD PROCESSING

│

├── 📊 KeyField_Changed Event

├── 🔍 Validation Checks

│ ├── ✅ Not on landing page

│ ├── ✅ Not read-only

│ ├── ✅ Value length > 0

│ └── ✅ Part not in add mode

│

├── 🔄 PerformUpdate Check

│ └── ❌ No changes detected (return-error)

│

└── 🎯 KeyField_Changed_GetByID

├── 🚫 Disable Details row

├── 💾 Set SysProposedValue: R1037

└── 🌐 API Call: GetByID

📥 PART DATA RETRIEVAL

│

├── 🌐 Execute_GetByID (PartSvc/GetByID)

├── ✅ Response received successfully

├── 🔄 Update_KeyFields_ViewName

│ ├── 🚫 Disable column events

│ ├── 💾 Set KeyFields.PartNum: R1037

│ └── ✅ Enable column events

│

└── 🎉 GetByID_OnSuccess Event

🏭 PLANT & AUTHORIZATION SETUP

│

├── 🌐 InitializeTracker

│ ├── 🏭 AuthorizedPlants: (Plant='MfgSys')

│ └── 🚚 InTransitPlants: (ToPlant='MfgSys' OR FromPlant='MfgSys')

│

├── 🎯 SetPartPlantRowToDefault

│ ├── 🔍 Find current plant row (index 0)

│ ├── 📍 Set current row to plant data

│ └── 💾 SetPartPlantToCurrent: R1037

│

└── 📊 GetRowNumberbyUOM (UOM row #6, not default)

📊 DATA CALCULATIONS & LOADING

│

├── 🌍 CalcPartCOOTotal (Country of Origin)

│ ├── 💾 QtyPercTotal: 0

│ ├── 💾 ValuePercTotal: 0

│ └── 💾 Commit TotalPerc data

│

├── 🖼️ Set_Part_ImageID

│ └── 🌐 API Call: ImageSvc/GetRows

│

├── 📦 CreatePartBinInfoBinList

│ ├── 💾 Initialize empty bin list

│ └── 🔧 Execute join function

│

├── 🌐 GlobalPartEnablement

│ ├── 💾 PartGlobalFields: (empty)

│ └── ❌ Part not flagged as global

│

└── 🔍 GetGlbPartsExist

├── 🌐 API Call: PartSvc/GlbPartsExist

├── ✅ Result: false (no global parts)

└── 💾 GlbLinkActivate: false

💰 COST & INVENTORY DATA

│

├── 💰 LoadPartCostData

│ └── 🌐 API Call: PartCostSearchSvc/GetRows

│

├── 🏷️ GetPartUOMPlasticTax

│ └── 🌐 API Call: PartSvc/GetPartUOMPlasticTax

│

├── 📦 GetBins

│ └── 🌐 API Call: PartBinSearchSvc/GetFullBinSearch

│

├── 📋 GetInventoryAttributeValues

│ └── ❌ No inventory tracking enabled

│

├── 🔧 SetInventoryAttributesFilter

│ └── 📊 Filter: InventoryDynAttrValues

│

├── 🧮 BuildEquation

│ └── 💾 ConversionFactor: "1 BTL * 1 = 1.0000000 LB"

│

└── ⚡ CheckForBackFlush

├── ✅ PartPlant count > 0

├── 🔍 Check BackFlush setting

└── 💾 BackFlush: false

🎯 COMPLETION

│

├── ✅ Enable Details row

├── 💾 Filter.PartNum: R1037

└── 🎉 System Ready for User Interaction

════════════════════════════════════════════

📊 SUMMARY STATISTICS:

• Total API Calls: 8

• Datasets Cleared: 4 (Part, KeyFields, KeyFieldParams, GlbPart, PartUOMPlasticTax)

• Key Data Points Set: 15+

• Plant: MfgSys

• Part Number: R1037

• UOM Conversion: 1 BTL = 1.0000000 LB

• BackFlush: Disabled

• Global Part: No

🔧 TECHNICAL NOTES:

• All REST API calls completed successfully

• No errors or warnings encountered

• Part exists and is valid

• System ready for part management operations

Haven’t done any Kinetic C# in VSCode but hope to try something like this plus Copilot…

One obstacle to AI value in this space, IMO, is the fact that Epicor help is not publicly accessible and code samples not plentiful online. I find it makes things up re: Epicor while it doesn’t for many other tech. Surely this is due to lack of samples, blogs, etc.

If it weren’t for this site, AI wouldn’t know jack about Epicor.

If the corporate plan is to capitalize on this fact, making Prism the only product that ‘knows’, that’s a failed model, IMO.

PS - One drawback to AI is my spelling is slipping ;-|

I don’t recall having to pay for the extension?

Extension is free. GH Copilot sub more more betterer ;-|

| Feature | Free Plan | Pro Plan | Pro+ Plan |

|---|---|---|---|

| Code Completions | 2,000/month | Unlimited | Unlimited |

| Chat Interactions | 50/month | Unlimited | Unlimited |

| Premium Requests (Agent Mode, etc.) | 50/month | 300/month | 1,500/month |

| Model Access | GPT-4o, Claude 3.5 | GPT-4.1, Claude 3.7, Gemini | All models incl. Claude Opus 4 |

| Multi-file Editing | |||

| Custom Instructions | |||

| Voice & Terminal Chat |

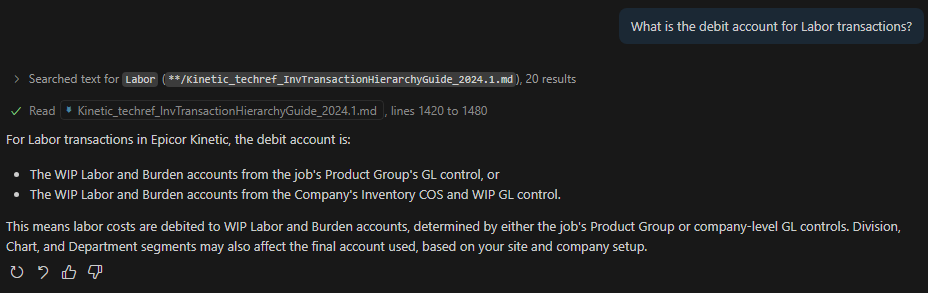

Given enough context, I still think you can get a lot of value. For example, I converted the Kinetic Inventory Transaction Hierarchy Guild to markdown and opened it in VSCode.

I uploaded the context and asked:

It takes a bit of work to overcome that obstacle but it isn’t as bad as it seems.

A few tips that I use (that have been applied to be able to do things like successfully generate a full EFT .cs based on a PDF specification) are as follows:

-

Build out a series of MCP tools that can be used by the models that you are using. These are what truly extend the capabilities of the models into more niche domains (kind of like how having a google search tool allows models with old knowledge cutoff dates to still answer questions about recent events). So as an example of one that I put together: There are XML files in the client directory that contain method signatures for nearly every single method within epicor for every single BO. After building a parser to convert these XML files into a more easy to use format (or stick with the xml files and add some additional info in your system prompt), you have a tool call that determines which BO you are asking about (part of my parsing process is to generate overviews of the BO’s based on the methods that they contain). So the model will have a tool call called “IdentifyTargetBusinessObject” or similar. Then after it knows which BO you are talking about, you can pull either your cleaned / parsed XML BO def into context in its entirety, or have an additional tool call to identify the method that you are asking about. With this information along with some clever prompting (only use c# features up to and including C# 7.2, no new types/classes/methods, only code that can run inside an existing method, declare helper functions as Func<> or Action<>, etc), you can then just feed it traces along with a request to convert it to a function and it works very reliably. Another way of accomplishing the aforementioned functionality differently is having a tool call dynamically pull the open API spec pages from the rest help which also works really well. Sprinkle in a few other tool calls to validate table and field names and you’ll be golden

-

if you just want a bit of auto complete, part of that github action that I shared is to add the extensions that you want included by default in the github codespace, so you can just include github copilot as a dependency and it will auto-install.

-

You can tweak the set up script to include a bunch of code examples (as an alternative to the much more involved step 1) in a default c# file that is always included in the spun up project and copilot will pick it up and use it to guide its suggestions.

-

Build out a single comprehensive prompt that include a series of user requests/answers within it to help guide the model to answer in the format that you want. This makes things much more reliable

As an example:

User: Retrieve sales order records from the last month.

Assistant: Db.OrderHed.Where(o => .....).ToList()

...

More examples

...

User's request:

I’ve had the most success (obvious, I suppose) with option 1, but it also requires the most work…

And hopefully this goes without saying, but NEVER blindly accept code generated by any AI models. Ensure that you fully review, understand, and make changes if the model is giving out garbage (which will happen plenty ![]() )

)

I feel MCP usage should be clarified. It’s a middle-man https server which could be used to orchestrate a single tool group for multiple agents (in different use cases or servers).

If you have 1 code base or 1 use case, tools are able to be called natively from the same application as the AI call. MCP isn’t a requirement, but an extension. It’s like using microservices before you know you even have to scale. Sure, you can, but why?

Context is king. The more context that you can give an LLM, generally, better are the results.

For example, there is an MCP that pulls up-to-date documentation into prompts, which in theory, can reduce hallucinations. This MCP is called Context7.

So yes, we can use MCPs to answer questions for agents, but some have used MCP servers to improve the context of the prompts.

![]()

Tools = MCP

They are the same! MCPs are just remote tools.

In fact, tools like (github/ms) copilot, chatgpt, claude, etc all operate by severely limiting context windows. If you’re going to be making MCPs, you might consider your own agent strategy. Paying for chatgpt teams? 32k context window from the max 400k available by the model on GPT5.

^ MCPs do integrate with all of the above, though. Hence the multiple orchestration mention. It is useful if you don’t make your own tools - but if you start making your own MCPs, you are already there.

edit:

as an example, I have a tool exposed to my AI chatbot called epicor-sql when using the SQL Agent. The AI understands the intent, which tools to call, and provides you a call list. You execute the function and its results are placed into context. o4-mini, o3, claude-sonnet-3.5 and on were the best models for this. GPT 5 reasoning is probably the best now.

No MCP needed, I used Azure Relay to get the data from our servers behind infra to the public server hosting the AI chat. The AI used local tools to make those calls.

Correct, you don’t need MCP. But, building context retrieval tools that reside outside of an MCP server that are constrained to a specific providers response format or require tweaking to utilize with other applications is not fun to maintain in my experience. So, building the MCP servers along with those specific context retrieval tools to be able to use with any client that understands that protocol (there are lots ![]() ) is worth mentioning. Regardless, to anyone wishing to get the most out of their experience when working on subjects that were not prevalent in the training data of these LLMs: context is one of the most important things to use when aiming for the most accurate responses. Whether you provide that context through MCP, or by pasting a bunch of info into your request to the LLM, it does not matter as long as you provide context to the LLM (especially when dealing with topics of scarce training data such as epicor development). Hopefully that clarifies why I specifically used the term MCP tools in my original post.

) is worth mentioning. Regardless, to anyone wishing to get the most out of their experience when working on subjects that were not prevalent in the training data of these LLMs: context is one of the most important things to use when aiming for the most accurate responses. Whether you provide that context through MCP, or by pasting a bunch of info into your request to the LLM, it does not matter as long as you provide context to the LLM (especially when dealing with topics of scarce training data such as epicor development). Hopefully that clarifies why I specifically used the term MCP tools in my original post.