I have been building a BAQ in my “free time” to utilize for audit log information. So far I have only been able to do single queries, such as the “Order Release Audit Log Warehouse Changed Query”. Instead of having multiple individual queries parsing that audit log looking for a specific field change, I would like one BAQ with parameters for things like TableName, DateChanged, User, FieldName. I figured I would post to see if anyone here was successful in doing this already so I am not reinventing the wheel on my side… slowly… painfully… TIA!

I’m not 100% sure what you are trying to do, but if you are looking for triggers to show certain rows, I usually do a calculated field to look for that condition and return true if it qualifies. Then you can use the subquery criteria tab to filter (using or’s) if any of the calculated fields are true.

You might have to do sub queries to do this as well, depending on how complicated your criteria is.

EDIT: Nevermind me, I think I had a bad dream.

I’m interested in a solution. Seems like you would need a Query for each individual table as the change log is not stored in a single table. Or daisy chain them together I supposed.

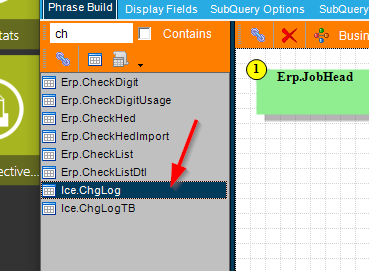

The change log is a single table.

I have done several “tricks” to filter out change logs, but they typically are looking for certain values. The ChgLog table has several important columns, and i typically look at the following columns:

Then will add a PARAMETER to the BAQ to ask which table name you are interested in, UserID, as well as a date range. I apply those filters to reduce the data… then you can add one additional “key word” filter to find data in the Log Text I am attempting to find. but again, this can all depend on your needs.

I know of one customer who actually made a special version of a query that selected data from OrderDtl change logs, and parsed it out to show From/To values for Part, Price, and Quantity… but this took some special coding and only worked for that one table. This is because the LogText field is simply that… a big text field that flows lots of data if you are tracking lots of field changes.

I have created a SQL script over a year and a half ago that I have not really touched since. This parses out the LogText column into multiple columns and rows. I am sure there are some things I would have done differently and I have not tried to figure out how to create a BAQ from this script. If someone does happen to create a BAQ from this, I would want it if you are willing to share.

--drop table #split_row;

--drop table #parsed_userLine;

--drop table #parsed_chgDtl;

declare @i int

declare @temp_id int

declare @temp_str1 nvarchar (max)

declare @temp_str2 nvarchar (max)

declare @temp_str3 nvarchar (max)

declare @temp_expr nvarchar (max)

declare @temp_bit bit

declare @numrows int

create table #split_row (

splitId int Primary Key IDENTITY(1,1),

value nvarchar(max),

chgLog_sysRowId char(36)

)

create table #parsed_userLine (

parsedId int Primary Key IDENTITY(1,1),

userId nvarchar(75),

timeChgd nvarchar(75),--time,

newRecord bit,

chgLog_sysRowId char(36)

)

create table #parsed_chgDtl (

userLine_parseId int,

chgType nvarchar(30),

currentValue nvarchar(max),

newValue nvarchar(max)

)

--insert into #split_row

insert into #split_row

Select split.value, ice.ChgLog.SysRowID

--into #split_row

from ice.ChgLog

cross apply String_split ( trim ( ice.ChgLog.logtext ), char(10) ) as split

where ice.ChgLog.Identifier = 'orderhed' --*************************You can add other criteria to spend this up with items such as datestampedon, etc

order by ice.ChgLog.DateStampedOn desc

--select * from #split_row where value like '[a-z]%[0-2][0-9]:[0-5][0-9]:[0-5][0-9]%New Record%'

set @i = 1

set @temp_id = 0

set @numrows = ( select count(*) from #split_row )

while ( @i <= @numrows )

begin

set @temp_bit = 0

set @temp_str1 = NULL

set @temp_str2 = NULL

set @temp_str3 = NULL

set @temp_expr = NULL

if ( ( select value from #split_row where splitId = @i ) like '[a-z]%' )

begin

--print 'UserID Action at row,' + ltrim ( str (@i) )

set @temp_expr = ( select value from #split_row where splitId = @i )

set @temp_str1 =

SUBSTRING (

@temp_expr,

1,

case when CHARINDEX ( ' ', @temp_expr ) > 0

then CHARINDEX ( ' ', @temp_expr )

else LEN ( @temp_expr )

end

)

set @temp_expr =

ltrim (

SUBSTRING (

@temp_expr,

CHARINDEX ( ' ', @temp_expr ),

LEN ( @temp_expr )

)

)

--print 'Expression:' + @temp_expr

set @temp_str2 =

case

--when 0 then '0 spaces in User Detail Row'

--when 1 then '1 spaces in User Detail Row'

--when 2 then '2 spaces in User Detail Row'

when ( select count(*) from string_split ( ( select value from #split_row where splitId = @i ), ' ' ) ) >= 2

then --'3 spaces in User Detail Row'

SUBSTRING (

@temp_expr,

1,

8

)

--else

--begin

--'Expression:' + @temp_expr

--'Num of spaces:' + str ( ( select count(*) from string_split ( ( select value from #split_row where splitId = @i ), ' ' ) ) ) + '-Expression:' + @temp_expr

end

if ( @temp_expr like '[0-2][0-9]:[0-5][0-9]:[0-5][0-9]%New Record%' )--( select count(*) from string_split ( rtrim ( @temp_expr ) , ' ' ) ) >= 2)

begin

set @temp_expr = --WORK ON FORMAT

ltrim (

SUBSTRING (

@temp_expr,

CHARINDEX ( ' ', @temp_expr ),

LEN ( @temp_expr )

)

)

--print 'Expression:' + @temp_expr

set @temp_bit = 1

end

--set @temp_str3 = cast (#split_row.chgLog_sysRowId as char(36) )

INSERT INTO #parsed_userLine ( userId, timeChgd, newRecord, chgLog_sysRowId )

VALUES ( @temp_str1, @temp_str2, @temp_bit, ( select chgLog_sysRowId from #split_row where splitId = @i ) )

set @temp_id += 1

end

else

begin

if ( ( select value from #split_row where splitId = @i ) like ' %' )

begin

--print 'Change Detail Action at row,' + ltrim ( str (@i) )

set @temp_expr = ( select value from #split_row where splitId = @i )

set @temp_str1 =

trim (

SUBSTRING ( @temp_expr, 1, CHARINDEX ( ':', @temp_expr ) - 1 )

)

set @temp_expr = SUBSTRING ( @temp_expr, CHARINDEX ( ':', @temp_expr ) + 1, LEN ( @temp_expr ) )

set @temp_str2 =

trim (

SUBSTRING ( @temp_expr, 1, CHARINDEX ( '-', @temp_expr ) - 1 )

)

set @temp_expr = SUBSTRING ( @temp_expr, CHARINDEX ( '>', @temp_expr ) + 1, LEN ( @temp_expr ) )

set @temp_str3 =

trim (

SUBSTRING ( @temp_expr, 1, LEN ( @temp_expr ) )

)

--print 'Expression:' + @temp_str3

INSERT INTO #parsed_chgDtl (userLine_parseId, chgType, currentValue, newValue )

VALUES ( @temp_id, @temp_str1, @temp_str2, @temp_str3 )

end

--else

--begin

--if ( ( select value from #split_row where splitId = @i ) like '' )

--begin

--print 'Empty at row,' + ltrim ( str (@i) ) --select value from #split_row where splitId = @i

--end

--end

end

set @i += 1

end

--select * from #parsed_userLine as userLine where userLine.newRecord = 1 --inner join #parsed_chgDtl as chgDtl on userLine.parsedId = chgDtl.userLine_parseId

Select

ChgLog.Company, --parsed.*--, parsed.value

ChgLog.Identifier,

ChgLog.TableName,

ChgLog.Key1 as OrderNum,

ChgLog.Key2 as OrderNum_Line_Rls,

ChgLog.DateStampedOn,

ChgLog.LogNum,

ChgLog.LogNum,

ChgLog.UserID,

(SUBSTRING(ChgLog.Key2, 0,

(case when CHARINDEX('~', ChgLog.Key2) > 0

then CHARINDEX('~', ChgLog.Key2)

else LEN(ChgLog.Key2) + 1

end)

)) as OrderNum_Calc,

(case when ChgLog.Key2 like '[0-9]%~[0-9]%'

then

SUBSTRING( SUBSTRING(ChgLog.Key2, CHARINDEX('~', ChgLog.Key2) + 1, LEN(ChgLog.Key2)), 0,

(case when CHARINDEX('~', SUBSTRING(ChgLog.Key2, CHARINDEX('~', ChgLog.Key2) + 1, LEN(ChgLog.Key2))) > 0

then CHARINDEX('~', SUBSTRING(ChgLog.Key2, CHARINDEX('~', ChgLog.Key2) + 1, LEN(ChgLog.Key2)))

else LEN(SUBSTRING(ChgLog.Key2, CHARINDEX('~', ChgLog.Key2) + 1, LEN(ChgLog.Key2))) + 1

end)

)

end) as OrderLine_Calc,

(case when ChgLog.Key2 like '[0-9]%~[0-9]%~[0-9]%'

then

SUBSTRING(SUBSTRING(ChgLog.Key2, CHARINDEX('~', ChgLog.Key2) + 1, LEN(ChgLog.Key2)), CHARINDEX('~', SUBSTRING(ChgLog.Key2, CHARINDEX('~', ChgLog.Key2) + 1, LEN(ChgLog.Key2))) + 1, LEN(SUBSTRING(ChgLog.Key2, CHARINDEX('~', ChgLog.Key2) + 1, LEN(ChgLog.Key2))))

end) as OrderRls_Calc,

userLine.userId as userID_LogTxt,

userLine.timeChgd,

userLine.newRecord,

chgDtl.chgType,

chgDtl.currentValue,

chgDtl.newValue

from ice.ChgLog as ChgLog

inner join

#parsed_userLine as userLine

on ChgLog.SysRowID = userLine.chgLog_sysRowId

inner join

#parsed_chgDtl as chgDtl

on userLine.parsedId = chgDtl.userLine_parseId

--cross apply

-- String_split( ice.ChgLog.logtext, char(10) )

-- as parsed

where ChgLog.Identifier = 'orderhed' and ChgLog.DateStampedOn like '2019-%' --**************You can add or replace criteria to improve searching here as well

order by ChgLog.DateStampedOn desc

drop table #split_row;

drop table #parsed_userLine;

drop table #parsed_chgDtl;