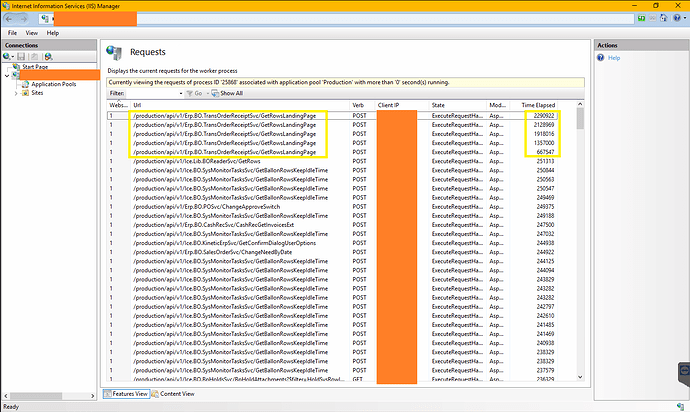

I’m at my wit’s end. For the last month I’ve had intermittent system-wide freezes in Kinetic where no one could work until I did an IISRESET on the app server. It happened about once every week or two.

Well, yesterday it happened 4 times and I just did a reset again today. It’s been rough all week. RAM and CPU always look fine on the app server.

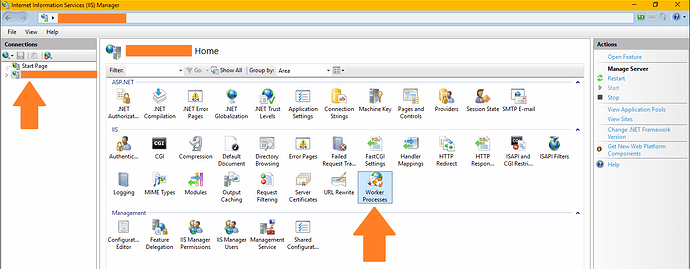

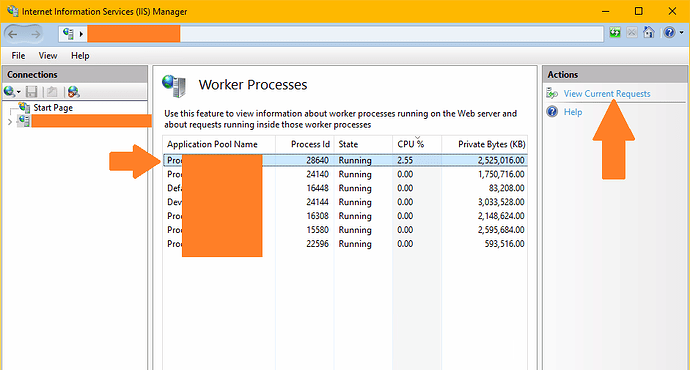

I have at least tried to isolate the issue, using 5 app pools (and 5 matching “application servers” in EAC):

- Our Task Agent is on its own app pool on a different server. This never crashes.

- Our integrations have their own app pool. No issues there.

- EKW has its own app pool. Again, no issues there.

- Starting yesterday afternoon, I put myself and my sysadmin coworker on our own app pool. No problems for us either.

- But all other human users that log in through a browser are the ones that keep seeing system freeze-ups.

App pool 1 (task agent) is on the SQL server, and 2-5 are on the app server.

When the user app pool (#5) freezes, it does not affect the others. I can keep working in one of the other app pools if I want. I’m often oblivious to the users’ struggles with slowness and freezing.
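Because a freeze in the user pool (#5) doesn't show up from the other pools, a small external probe could at least timestamp when each one starts. This is a minimal sketch, assuming each pool can be reached at its own URL. The `POOLS` names and addresses are hypothetical placeholders, not real Kinetic endpoints, and the 10-second threshold and 60-second interval are arbitrary choices.

```python
import time
import urllib.error
import urllib.request
from typing import Dict, List, Optional

# HYPOTHETICAL probe targets, one per app pool on the app server.
# Replace with the real addresses; these are placeholders, not Kinetic endpoints.
POOLS: Dict[str, str] = {
    "integrations": "https://appserver.example/IntegrationPool/",
    "ekw": "https://appserver.example/EKWPool/",
    "admins": "https://appserver.example/AdminPool/",
    "users": "https://appserver.example/UserPool/",
}


def probe(url: str, timeout: float) -> Optional[float]:
    """Seconds until the pool answered, or None on timeout/connection failure."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read(1)
    except urllib.error.HTTPError:
        pass  # an HTTP error status (e.g. 401) still means the pool responded
    except OSError:
        return None  # URLError, socket timeouts and refused connections
    return time.monotonic() - start


def slow_pools(latencies: Dict[str, Optional[float]], threshold: float) -> List[str]:
    """Names of pools that failed or took longer than `threshold` seconds."""
    return sorted(n for n, t in latencies.items() if t is None or t > threshold)


def main(threshold: float = 10.0, interval: float = 60.0) -> None:
    """Probe every pool forever, printing a timestamped line when any is slow."""
    while True:
        latencies = {name: probe(url, threshold) for name, url in POOLS.items()}
        bad = slow_pools(latencies, threshold)
        if bad:
            stamp = time.strftime("%Y-%m-%d %H:%M:%S")
            print(f"{stamp} slow or unresponsive: {', '.join(bad)}")
        time.sleep(interval)
```

Nothing runs on import; call `main()` to start polling. An HTTP error status is counted as a live response because the pool still answered. Only timeouts and connection failures count as unresponsive.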

So, I’m not sure what else to try. Support has not helped. I guess, to be honest, I have not rebooted the server…

Another question I had: at what layer do BPMs live? In the app pool? In the database? In the task agent?